2025-08-25 teletext in north america

I have an ongoing fascination with "interactive TV": a series of efforts, starting in the 1990s and continuing today, to drag the humble living room television into the world of the computer. One of the big appeals of interactive TV was adoption: the average household had a TV long before the average household had a computer. So, it seems like interactive TV services should have proliferated before personal computers, at least following the logic that many in the industry did at the time.

This wasn't untrue! In the UK, for example, Ceefax was a widespread success by the 1980s. In general, TV-based teletext systems were pretty common in Europe. In North America, they never had much of an impact---but not for lack of trying. In fact, there were multiple competing efforts at teletext in the US and Canada, and it may very well have been the sheer number of independent efforts that sank the whole idea. But let's start at the beginning.

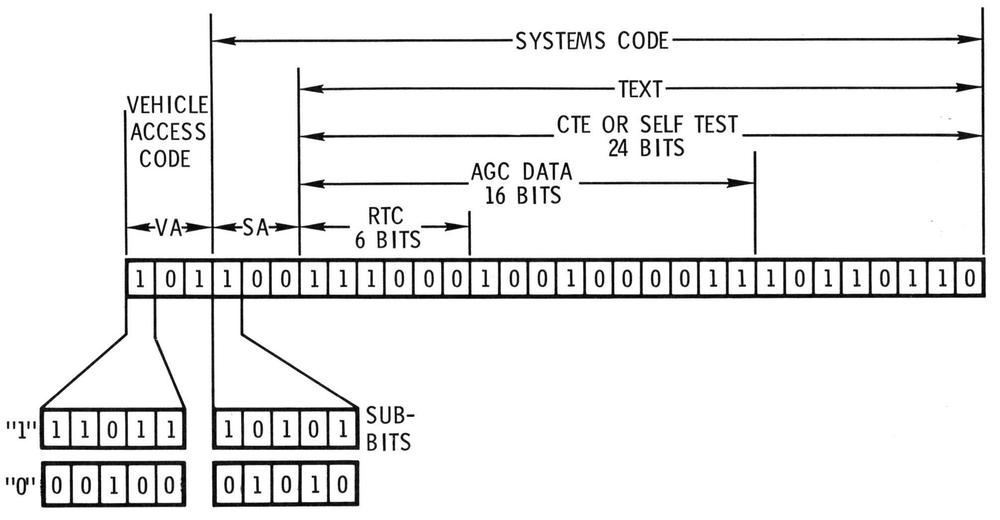

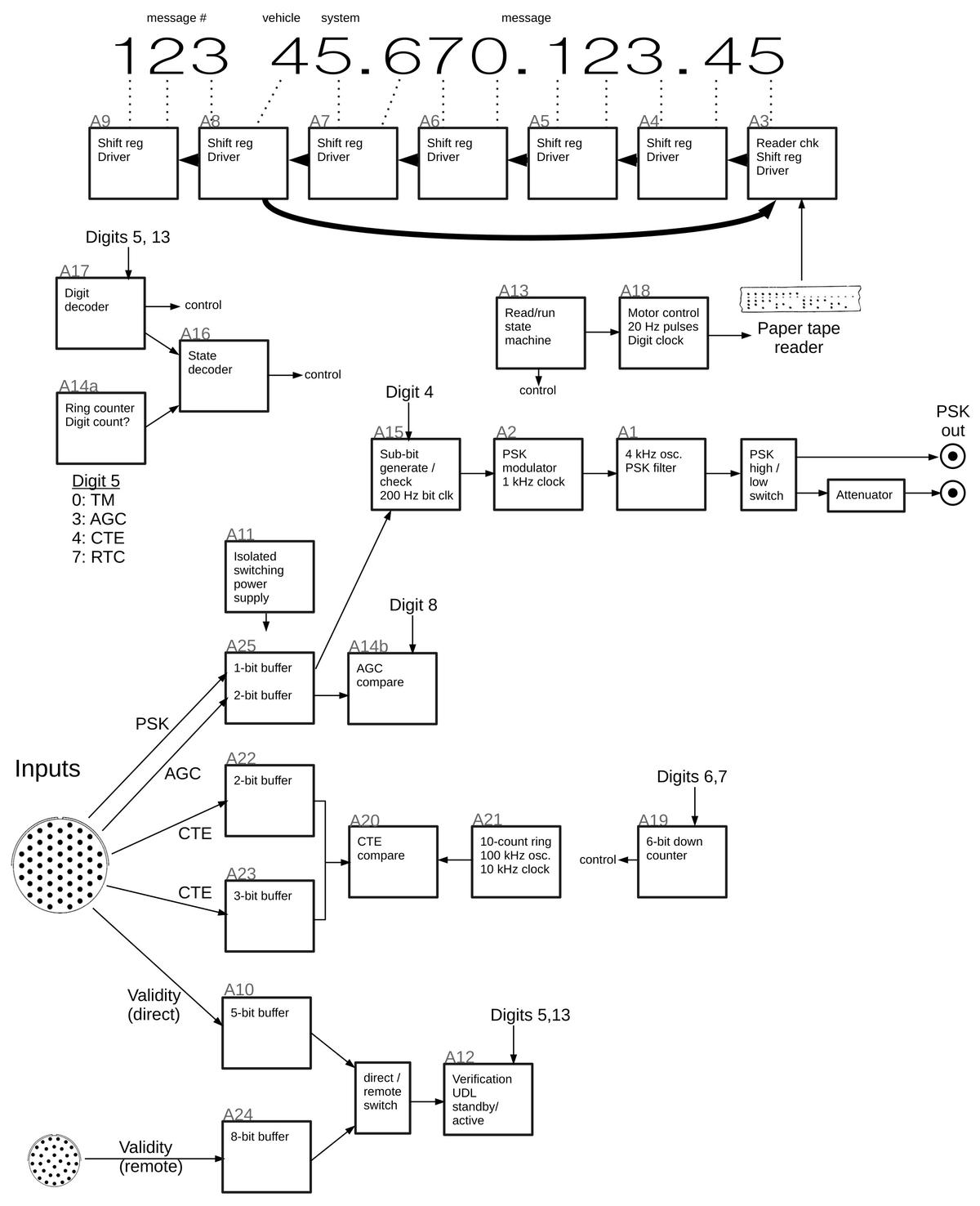

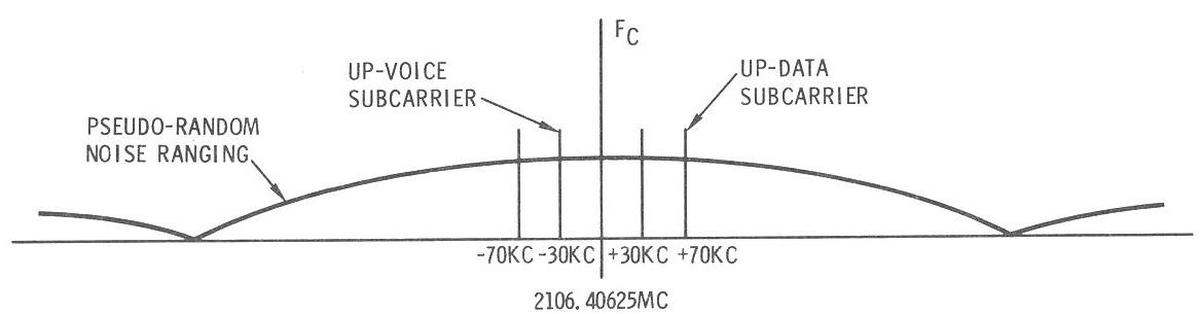

The BBC went live with Ceefax in 1974, the culmination of years of prototype development and test broadcasts over the BBC network. Ceefax was quickly joined by other teletext standards in Europe, and the concept enjoyed a high level of adoption. This must have caught the attention of many in the television industry on this side of the ocean, but it was Bonneville International that first bit [1]. Its premier holding, KSL-TV of Salt Lake City, has an influence larger than its name suggests: KSL was carried by an extensive repeater network and reached a large portion of the population throughout the Mountain States. Because of the wide reach of KSL and the even wider reach of the religion that relied on Bonneville for communications, Bonneville was also an early innovator in satellite distribution of television and data. These were ingredients that made for a promising teletext network, one that could quickly reach a large audience and expand to broader television networks through satellite distribution.

KSL applied to the FCC for an experimental license to broadcast teletext in addition to its television signal, and received it in June of 1978. I am finding some confusion in the historical record over whether KSL adopted the BBC's Ceefax protocol or the competing ORACLE, used in the UK by the independent broadcasters. A 1982 paper on KSL's experiment confusingly says they used "the British CEEFAX/Oracle," but then in the next sentence the author gives the first years of service for Ceefax and ORACLE the wrong way around, so I think it's safe to say that they were just generally confused. I think I know the reason why: in the late '70s, the British broadcasters were developing something called World System Teletext (WST), a new common standard based on aspects of both Ceefax and ORACLE. Although WST wasn't quite final in 1978, I believe that what KSL adopted was actually a draft of WST.

That actually hints at an interesting detail which becomes important to these proposals: in Europe, where teletext thrived, there were usually not very many TV channels. The US's highly competitive media landscape led to a proliferation of different TV networks, and local operations in addition. It was a far cry from the UK, for example, where 1982 saw the introduction of a fourth channel called, well, Channel 4. By contrast, Salt Lake City viewers with cable were picking from over a dozen channels in 1982, and that wasn't an especially crowded media market. This difference in the industry, between a few major nationwide channels and a longer list of often local ones, has widespread ramifications for how UK and US television technology evolved.

One of them is that, in the UK, space in the vertical blanking interval (VBI) to transmit data became a hotly contested commodity. By the '80s, obtaining a line of the VBI on any UK network to use for your new datacasting scheme involved a bidding war with your potential competitors, not unlike the way spectrum was allocated in the US. Teletext schemes were made and broken by the outcomes of these auctions. Over here, there was a long list of television channels and on most of them only a single line of the VBI was in use for data (line 21 for closed captions). You might think this would create fertile ground for VBI-based services, but it also posed a challenge: the market was extensively fractured. You could not win a BBC or IBA VBI allocation and then have nationwide coverage; you would have to negotiate such a deal with a long list of TV stations and then likely provide your own infrastructure for injecting the signal.

In short, this seems to be one of the main reasons for the huge difference in teletext adoption between Europe and North America: throughout Europe, broadcasting tended to be quite centralized, which made it difficult to get your foot in the door but very easy to reach a large customer base once you had. In the US, it was easier to get started, but you had to fight for each market area. "Critical mass" was very hard to achieve [2].

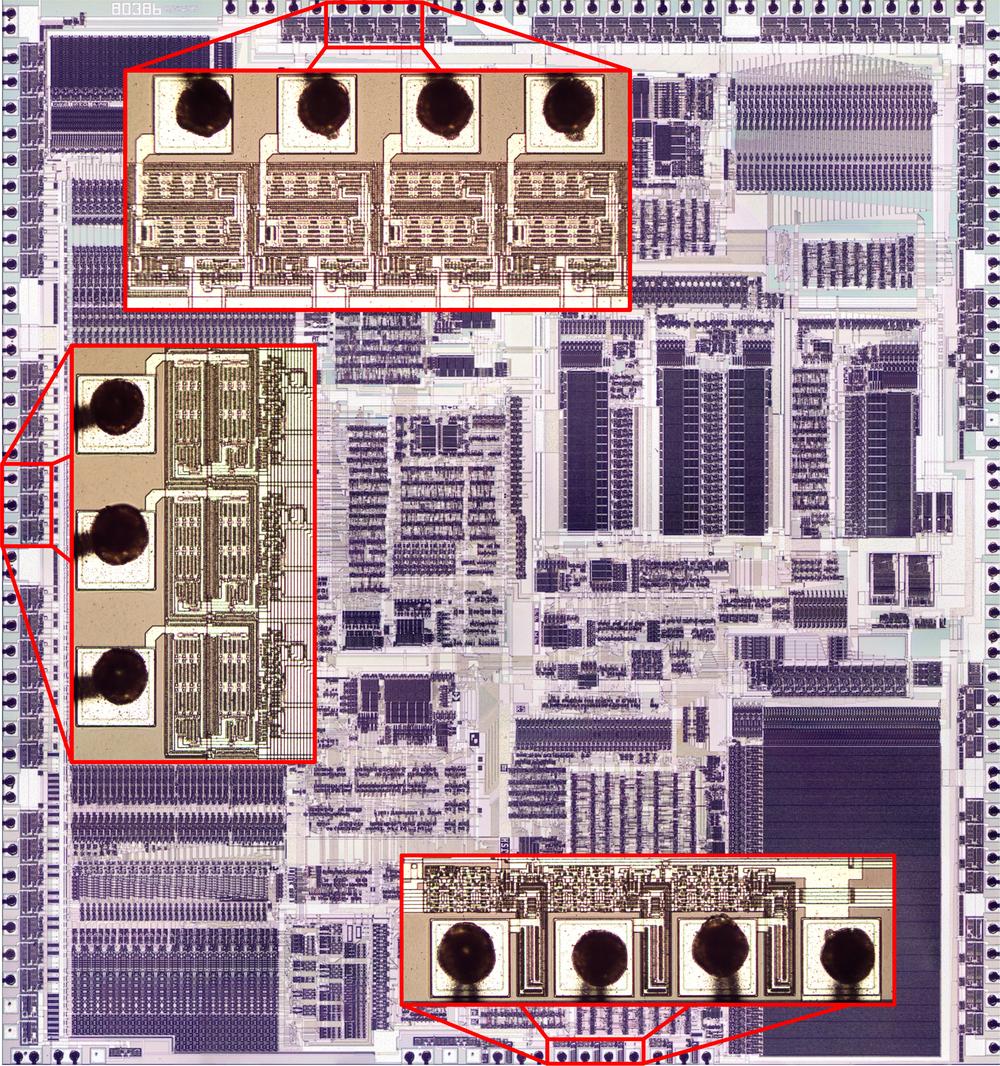

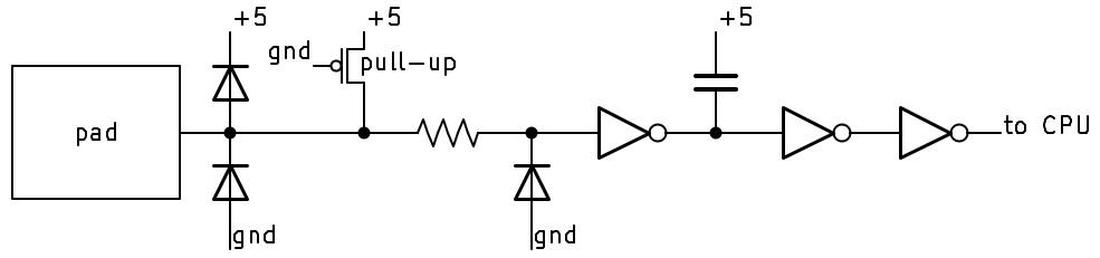

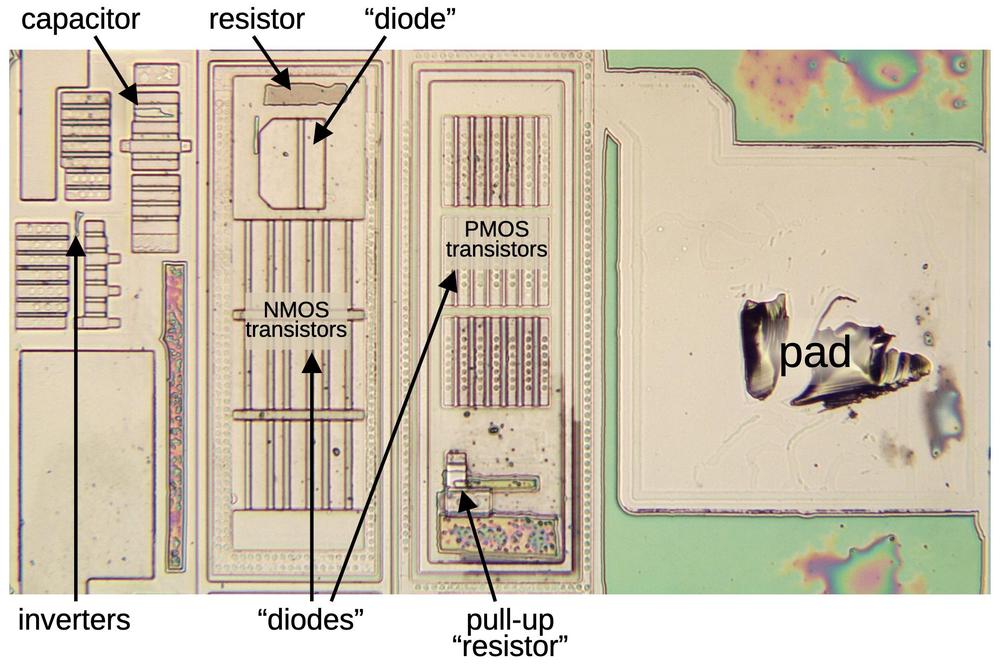

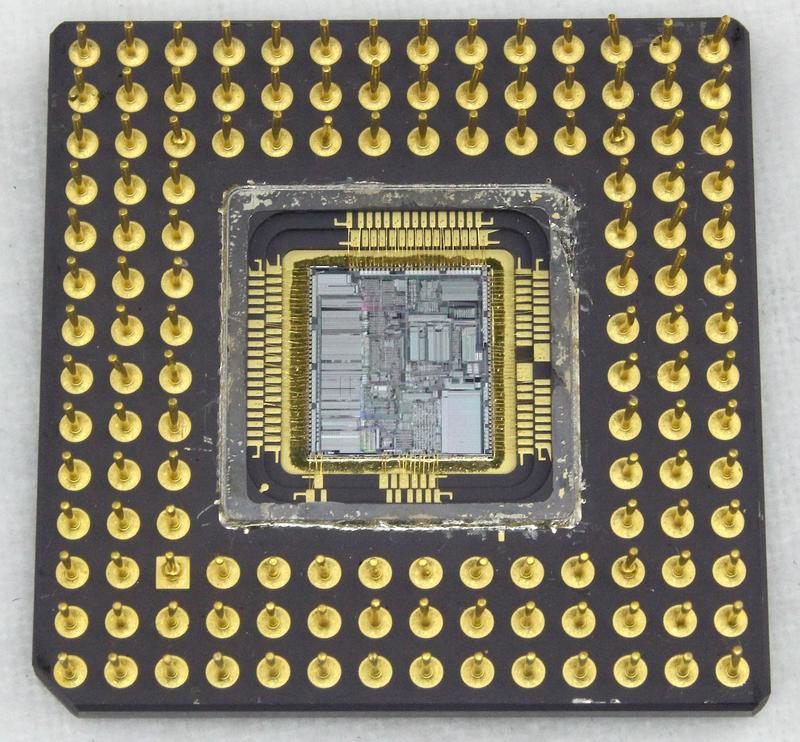

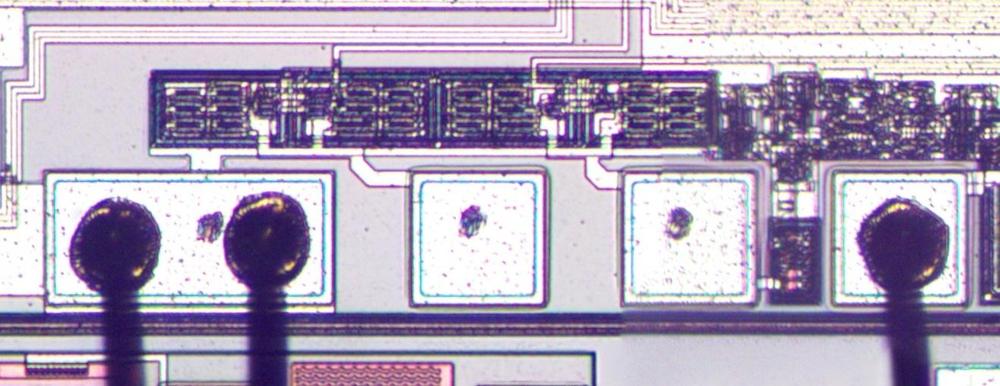

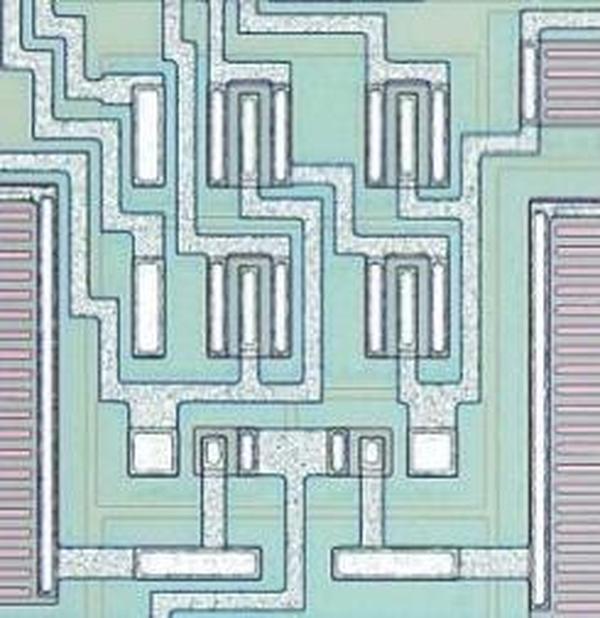

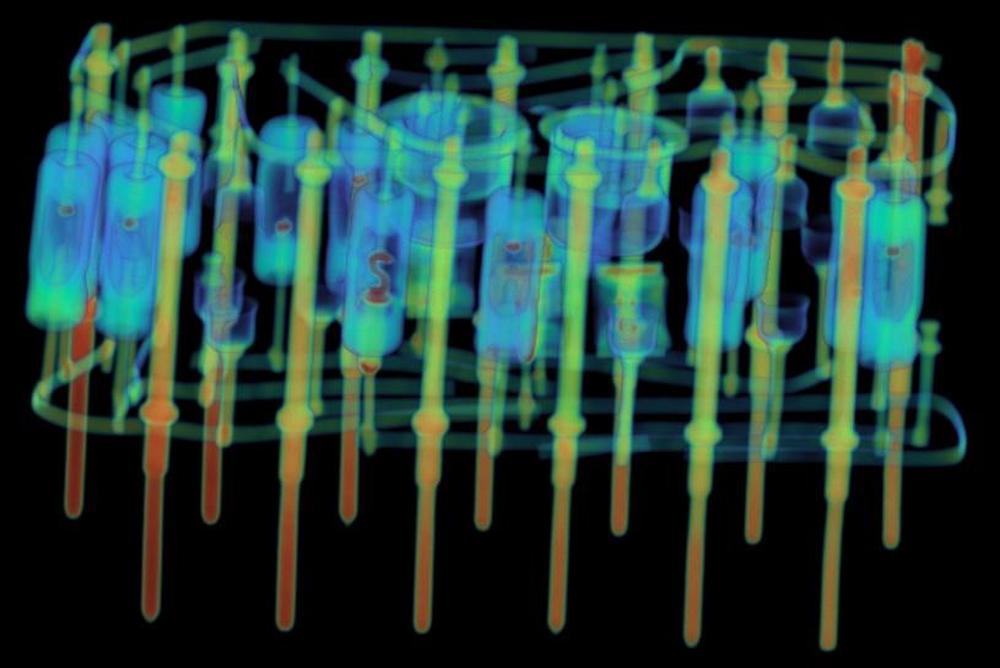

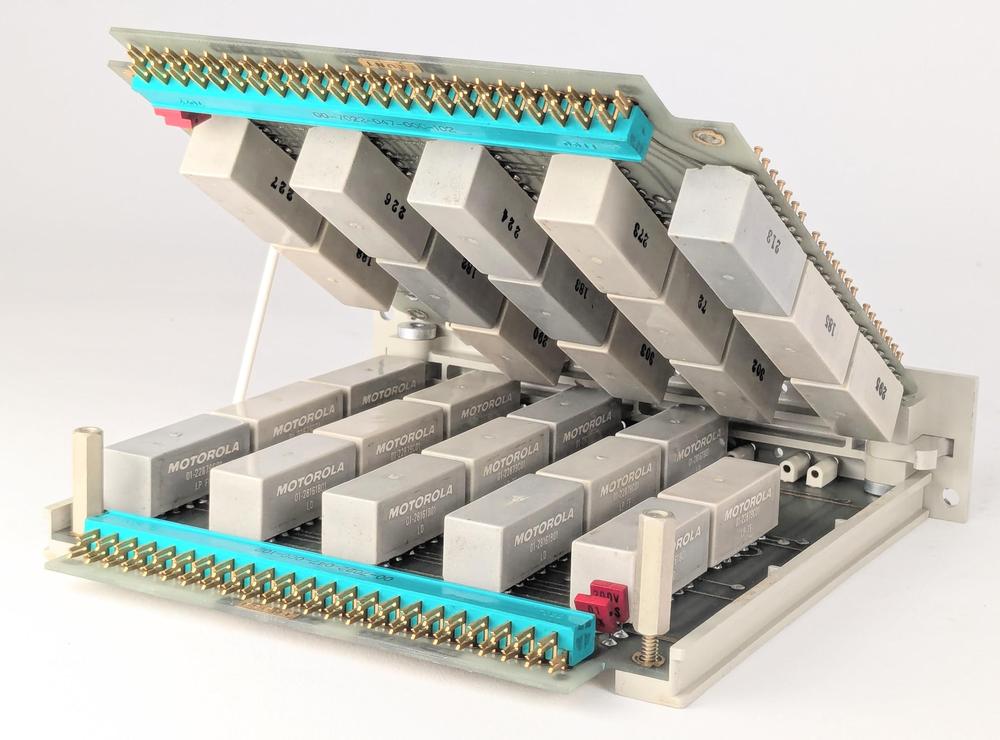

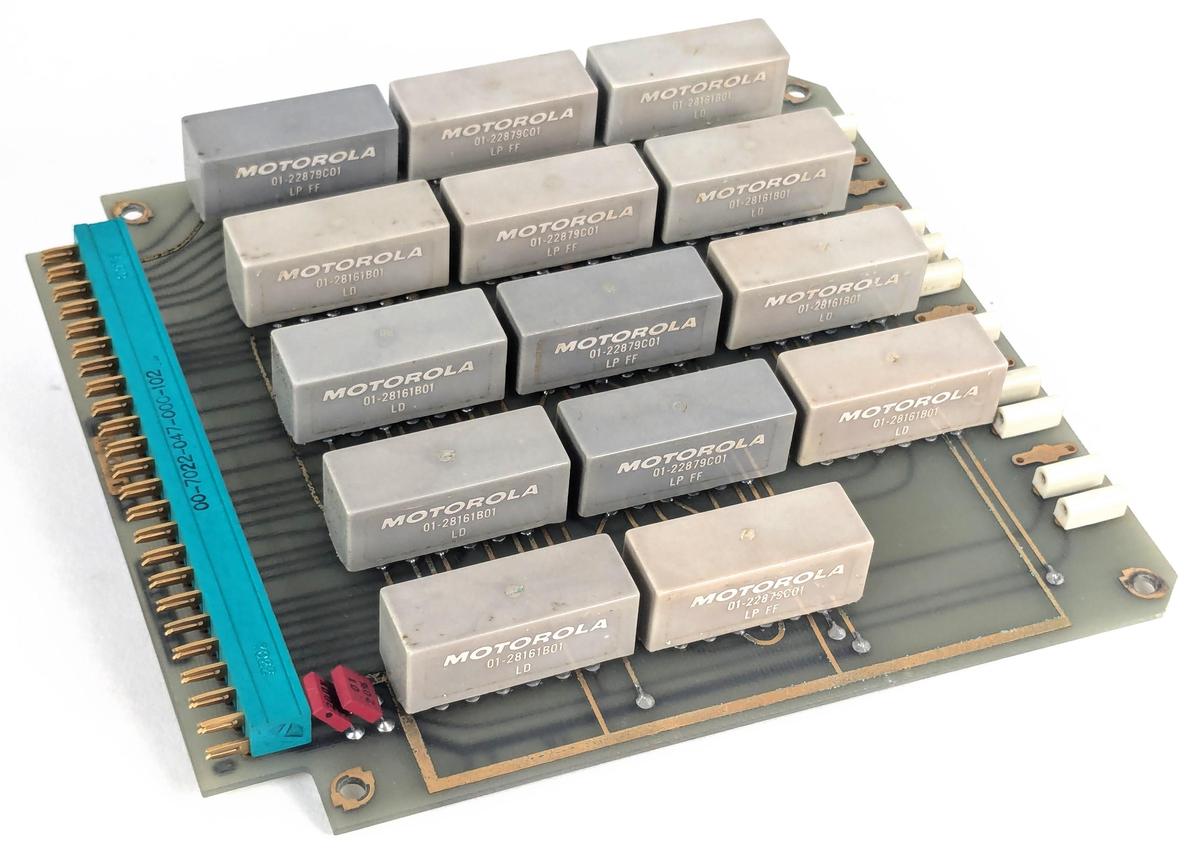

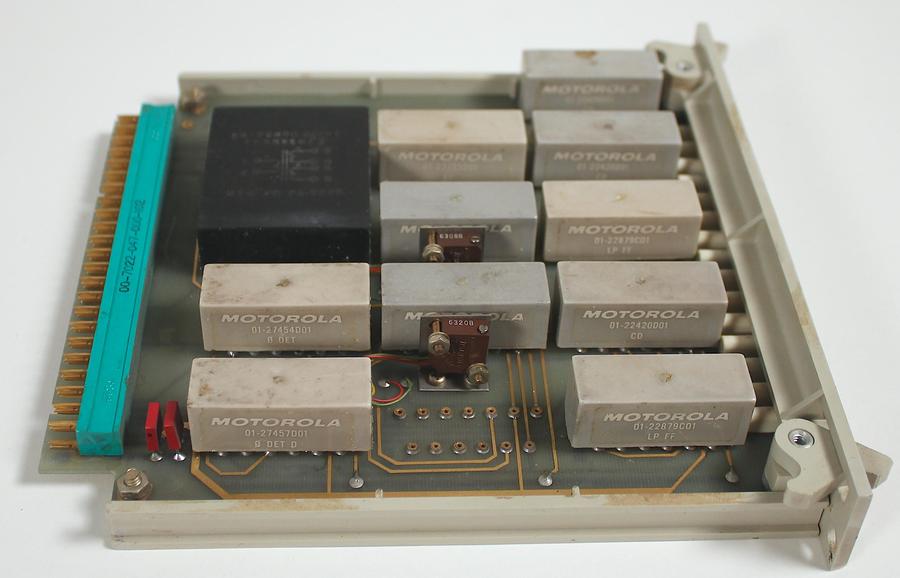

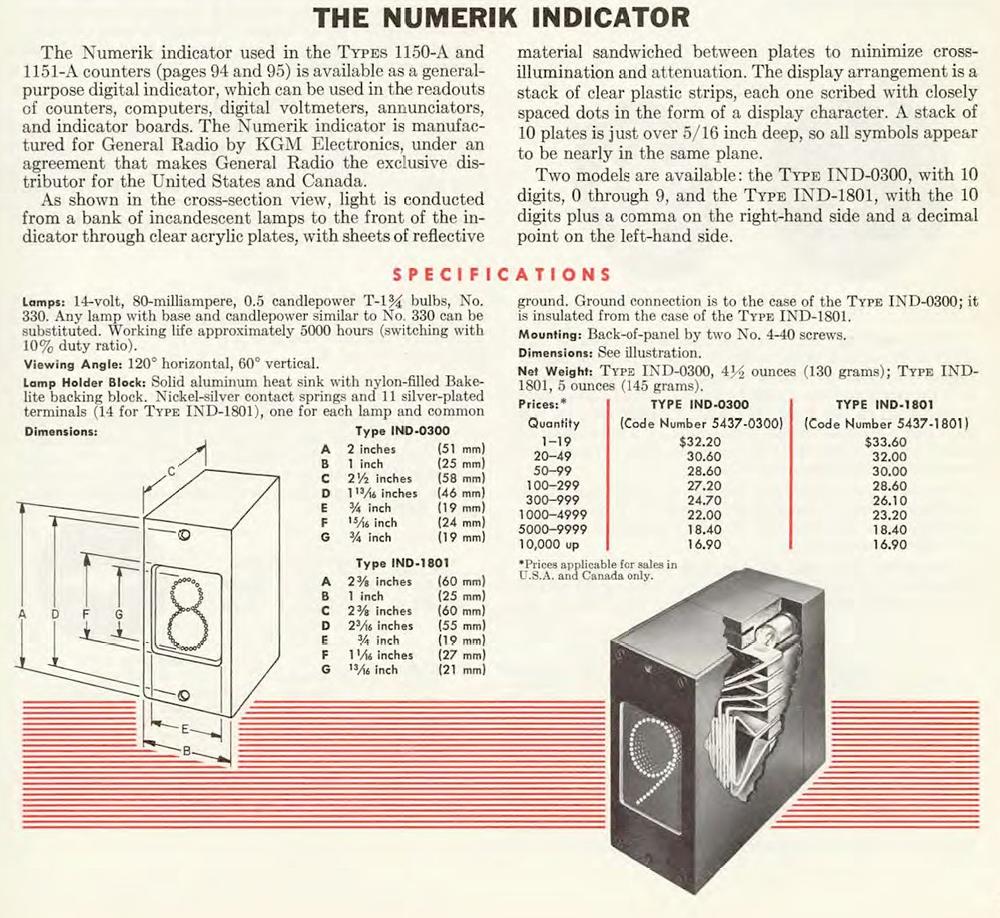

Back at KSL, $40,000 (~$200,000 today) bought a General Automation computer and Tektronix NTSC signal generator that made up the broadcast system. The computer could manage as many as 800 pages of 20x32 teletext, but KSL launched with 120. Texas Instruments assisted KSL in modifying thirty television sets with a new decoder board and a wired remote control for page selection. This setup, very similar to teletext sets in Europe, nearly doubled the price of the TV set. This likely would have become a problem later on, but for the pilot stage, KSL provided the modified sets gratis to their 30 test households.

One of the selling points of teletext in Europe was its ability to provide real-time data. Things like sports scores and stock quotations could be quickly updated in teletext, and news headlines could make it to teletext before the next TV news broadcast. Of course, collecting all that data and preparing it as teletext pages required either a substantial investment in automation or a staff of typists. At the pilot stage, KSL opted for neither, so much of the information that KSL provided was out-of-date. It was very much a prototype. Over time, KSL invested more in the system. In 1979, for example, KSL partnered with the National Weather Service to bring real-time weather updates to teletext---all automatically via the NWS's computerized system called AFOS.

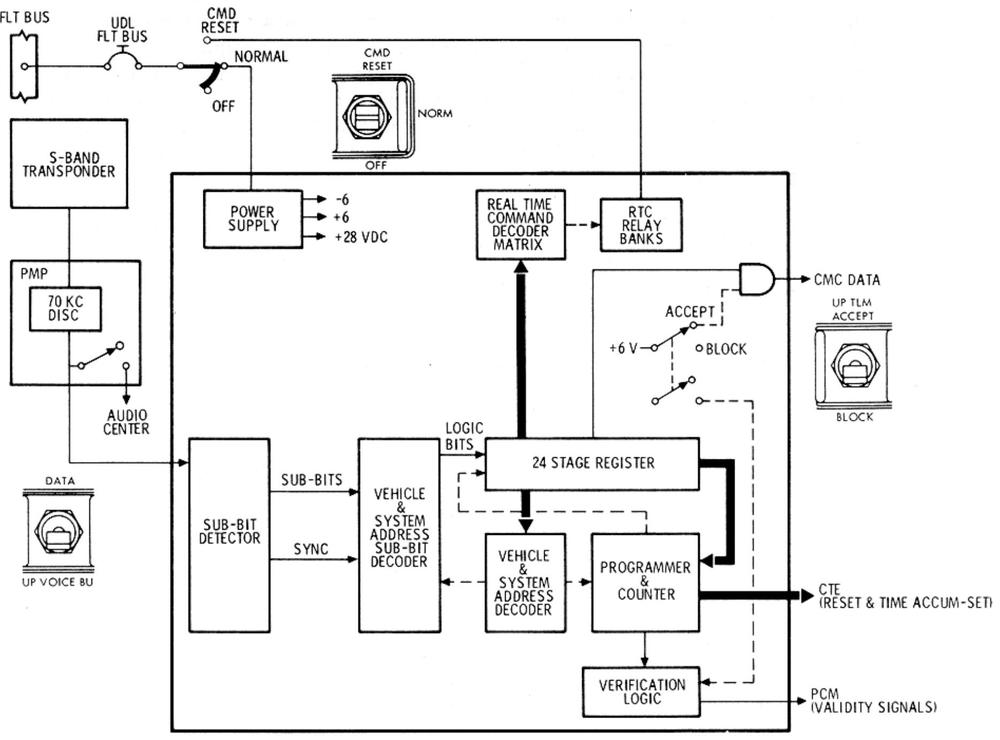

At that time, KSL was still operating under an experimental license, one that didn't allow them to onboard customers beyond their 30-set test market. The goal was to demonstrate the technology and its compatibility with the broader ecosystem. In 1980, the FCC granted a similar experimental license to CBS-affiliated KMOX in St. Louis, who started a similar pilot effort using a French system called Antiope. Over the following few years, the FCC allowed expansion of this test to other CBS affiliates including KNXT in Los Angeles. To emphasize the educational and practical value of teletext (and no doubt attract another funding source), CBS partnered with Los Angeles PBS affiliate KCET, which carried its own teletext programming with a characteristic slant towards enrichment. Meanwhile, in Chicago, station WFLD introduced a teletext service called Keyfax, built on Ceefax technology as a joint venture with Honeywell and telecom company Centel. Despite the lack of consumer availability, teletext was becoming a crowded field---and for the sake of narrative simplicity I am leaving out a whole set of other North American ventures right now.

In 1983, there were at least a half dozen stations broadcasting teletext based on British or French technology, and yet, there were zero teletext decoders on the US market. Besides their use of an experimental license, the teletext pilot projects were constrained by the need for largely custom prototype decoders integrated into customers' television sets. Broadcast executives promised the price could come down to $25, but the modifications actually available continued to cost in the hundreds. The director of public affairs at KSL, asked about this odd conundrum of a nearly five-year-old service that you could not buy, pointed out that electronics manufacturers were hesitant to mass produce an inexpensive teletext decoder as long as it was unclear which of several standards would prevail. The reason that no one used teletext, then, was in part the sheer number of different teletext efforts underway. And, of course, things were looking pretty evenly split: CBS had fully endorsed the French-derived system, and was a major nationwide TV network. But most non-network stations with teletext projects had gone the British route. In terms of broadcast channels, it was looking about 50/50.

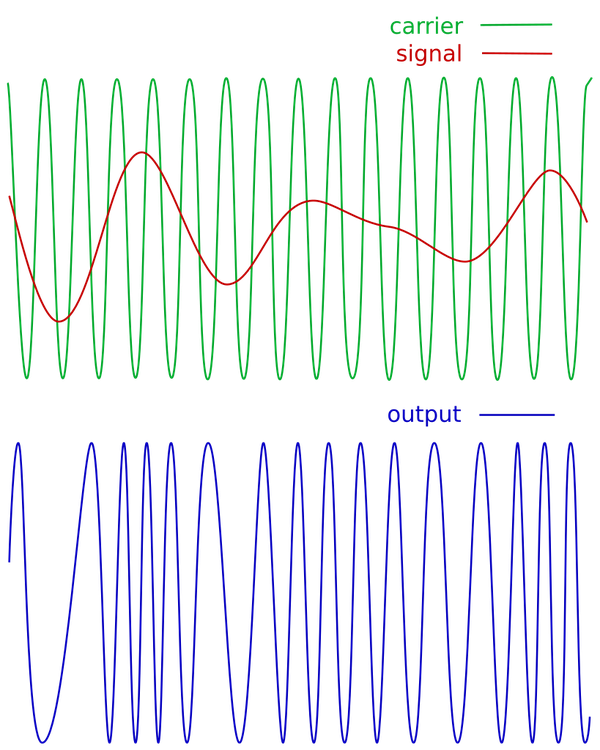

Further complicating things, teletext proper was not the only contender. There was also videotex. The terminology has become somewhat confused, but I will stick to the nomenclature used in the 1980s: teletext services used a continuous one-way broadcast of every page and decoders simply displayed the requested page when it came around in the loop. Videotex systems were two-way, with the customer using a phone line to request a specific page which was then sent on-demand. Videotex systems tended to operate over telephone lines rather than television cable, but were frequently integrated into television sets. Videotex is not as well remembered as teletext because it was a massive commercial failure, with the very notable exception of the French Minitel.
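To make the distinction concrete, here is a minimal Python sketch of the two delivery models. It is a toy, not a rendering of any real protocol: the page numbers, page contents, and function names are all made up for illustration. The teletext function counts how many page transmissions go by before the wanted page comes around, while the videotex function simply returns the page that was asked for.

```python
from itertools import cycle, islice

# Hypothetical page store: page number -> text. Contents are invented.
PAGES = {100: "Index", 101: "News", 102: "Weather", 103: "Sports"}


def teletext_wait(pages, on_air, wanted):
    """Model a one-way carousel.

    The broadcaster cycles through every page in order, forever. The
    decoder cannot ask for anything; it waits until `wanted` comes
    around. Returns (transmissions waited, page text).
    """
    if wanted not in pages:
        raise KeyError(wanted)  # a page outside the loop never arrives
    order = sorted(pages)
    # Start the loop at whichever page is on air when the viewer keys in.
    start = order.index(on_air)
    carousel = islice(cycle(order), start, None)
    for waited, page in enumerate(carousel):
        if page == wanted:
            return waited, pages[page]


def videotex_request(pages, wanted):
    """Model a two-way service: the request goes upstream (by phone line)
    and only the requested page is sent back."""
    return pages[wanted]


print(teletext_wait(PAGES, on_air=102, wanted=101))
print(videotex_request(PAGES, 103))
```

The practical consequence is visible even in the toy: in the carousel, the wait grows with the number of pages in the loop, which is one reason broadcast teletext services kept their page counts modest, while an on-demand service can hold a database of any size.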

But in the '80s they didn't know that yet, and the UK had its own videotex venture called Prestel. Prestel had the backing of the Post Office, because they ran the telephones and thus stood to make a lot of money off of it. For the exact same reason, US telephone company GTE bought the rights to the system in the US.

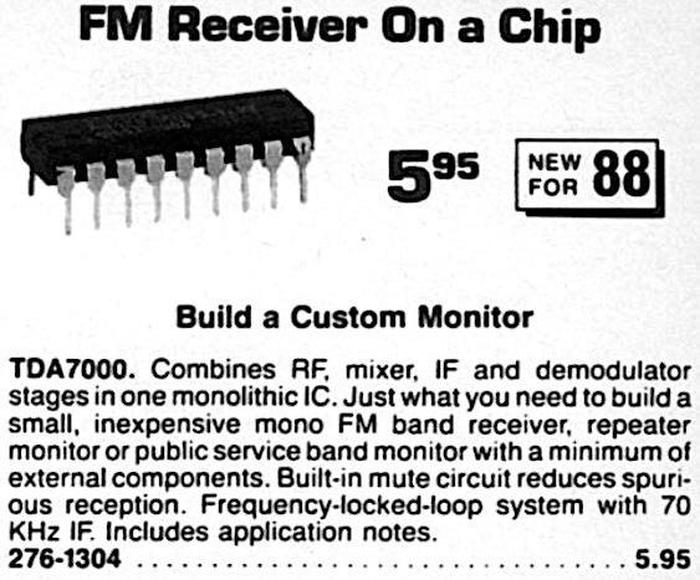

Videotex is significantly closer to "the internet" in its concept than teletext, and GTE was entering a competitive market. By 1981, Radio Shack had been selling a videotex terminal for several years already, a machine originally developed as the "AgVision" for use with an experimental Kentucky agricultural videotex service and then offered nationwide. This creates an amusing irony: teletext services existed but it was very difficult to obtain a decoder to use them. Radio Shack was selling a videotex client nationwide, but what service would you use it with? In practice, the "TRS-80 Videotex" as the AgVision came to be known was used mostly as a client for CompuServe and Dow Jones. Neither of these was actually a videotex service, using neither the videotex UX model nor the videotex-specific features of the machine. The TRS-80 Videotex was reduced to just a slightly weird terminal with a telephone modem, and never sold well until Radio Shack beefed it up into a complete microcomputer and relaunched it as the TRS-80 Color Computer.

Radio Shack also sold a backend videotex system, and apparently some newspapers bought it in an effort to launch a "digital edition." The only one to achieve long-term success seems to have been StarText, a service of the Fort Worth Star-Telegram. It was popular enough to be remembered by many from the Fort Worth area, but there was little national impact. It was clearly not enough to float sales of the TRS-80 Videotex and the whole thing has been forgotten. Well, with such a promising market, GTE brought its US Prestel service to market in 1982. As the TRS-80 dropped its Videotex ambitions, Zenith launched a US television set with a built-in Prestel client.

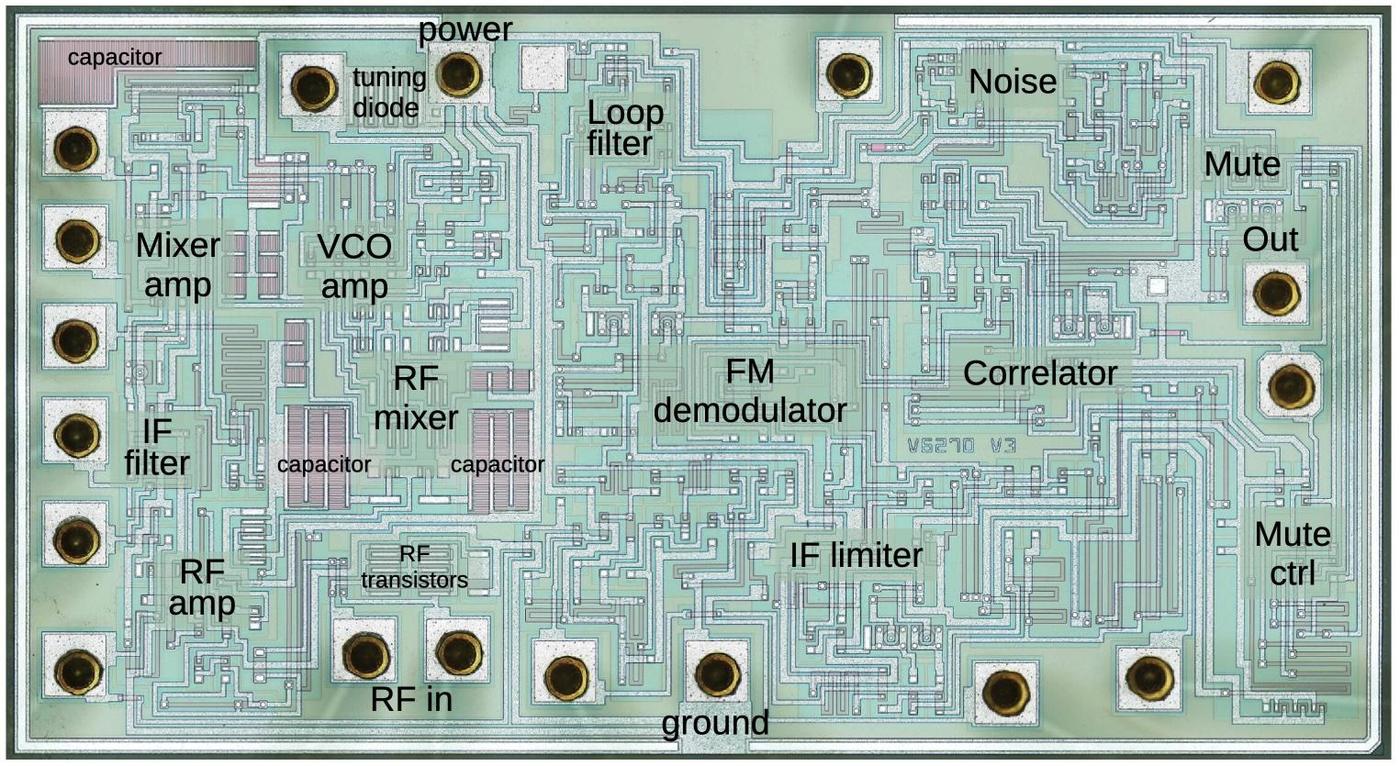

Prestel wasn't the only videotex operation, and GTE wasn't the only company marketing videotex in the US. If the British Post Office and GTE thought they could make money off of something, you know AT&T was somewhere around. They were, and in classic AT&T fashion. During the 1970s, the Canadian Communications Research Centre (CRC) developed a vector-based drawing system. Ontario manufacturer Norpak developed a consumer terminal that could request full-color pages from this system using a videotex-like protocol. Based on the model of Ceefax, the CRC designed a system called Telidon that worked over television (in a more teletext-like fashion) or phone lines (like videotex), with the capability of publishing far more detailed graphics than the simple box drawings of teletext.

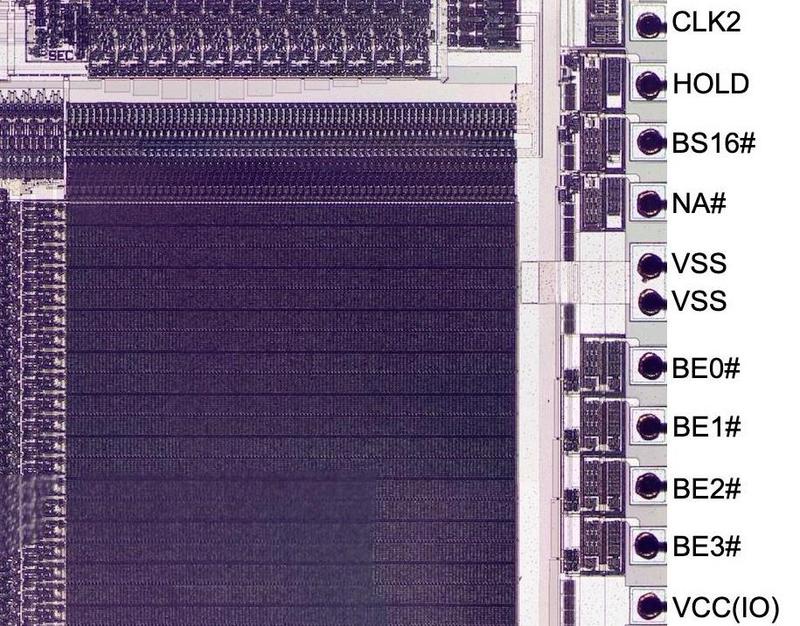

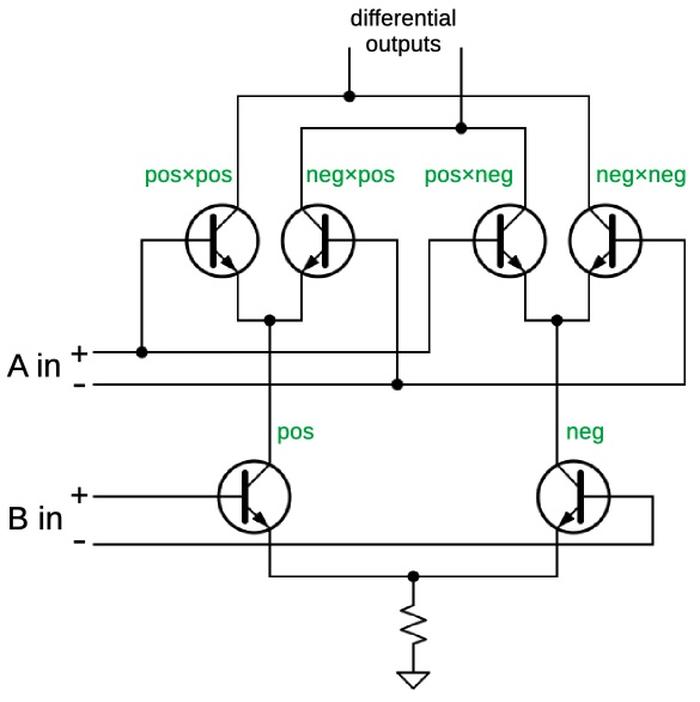

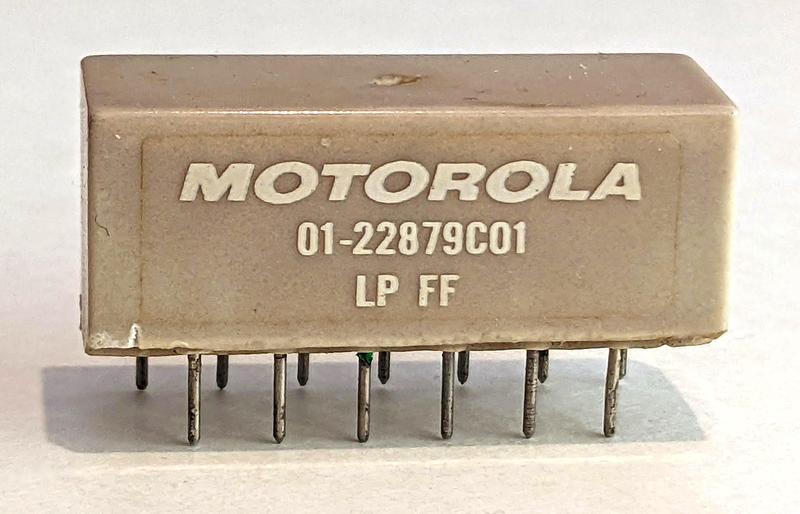

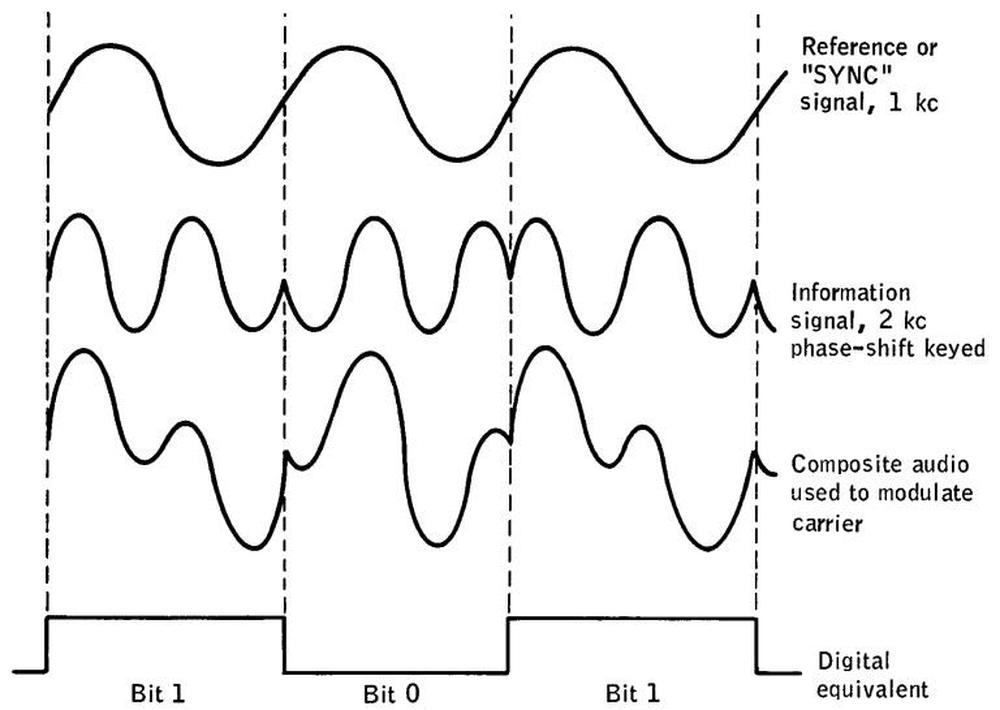

Telidon had several cool aspects, like the use of a pretty complicated vector-drawing terminal and a flexible protocol designed for interoperability between different communications media. That's the kind of thing AT&T loved, so they joined the effort. With CRC, AT&T developed NABTS, the North American Broadcast Teletext Specification---based on Telidon and intended for one-way broadcast over TV networks.

NABTS was complex and expensive compared to Ceefax/ORACLE/WST based systems. A review of KSL's pilot notes how the $40,000 budget for their origination system compared to the cost quoted by AT&T for an NABTS headend: as much as $2 million. While KSL's estimates of $25 for a teletext decoder had not been achieved, the prototypes were still running cheaper than NABTS clients that ran into the hundreds. Still, the graphical capabilities of NABTS were immediately impressive compared to text-only services. Besides, the extensibility of NABTS onto telephone systems, where pages could be delivered on-demand, made it capable of far larger databases.

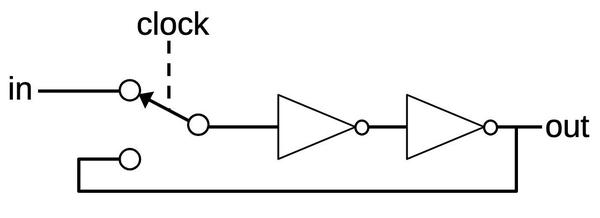

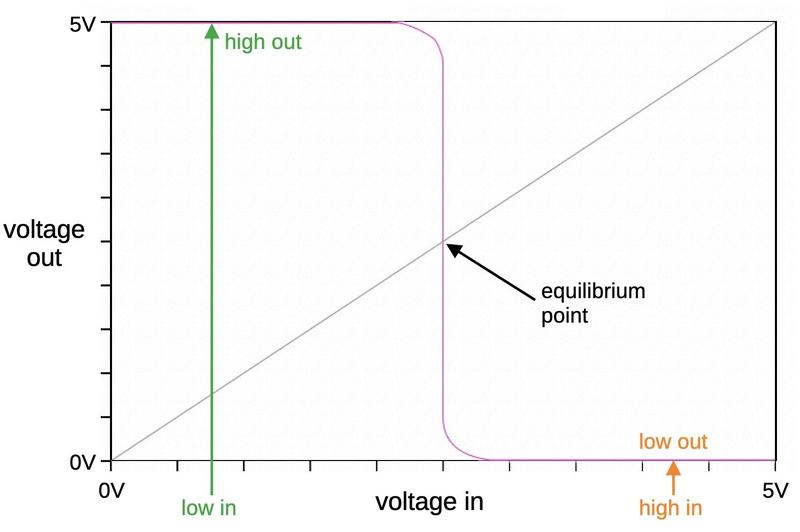

When KSL first introduced teletext, they spoke of a scheme where a customer could call a phone number and, via DTMF menus, request an "extended" page beyond the 120 normally transmitted. They could then request that page on their teletext decoder and, at the end of the normal 120 page loop, it would be sent just for them. I'm not sure if that was ever implemented or just a concept. In any case, videotex systems could function this way natively, with pages requested and retrieved entirely by telephone modem, or using hybrid approaches.
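As a rough illustration of that hybrid idea, the sketch below builds the page order for one pass of the loop: the regular pages first, then any "extended" pages that viewers have phoned in, each sent once at the end. Everything here is hypothetical, including the page numbers and the function name; as noted, it isn't clear KSL ever built this, so treat it as a model of the concept only.

```python
from collections import deque


def build_pass(regular_pages, pending_requests):
    """Return the page order for one pass of the broadcast loop.

    regular_pages: page numbers that are always transmitted.
    pending_requests: deque of extended page numbers requested by phone.
    """
    order = list(regular_pages)
    # Drain the phone-in queue: each requested page rides along once,
    # after the regular pages, and is then dropped from the loop.
    while pending_requests:
        order.append(pending_requests.popleft())
    return order


regular = range(100, 220)    # 120 regular pages; the numbering is assumed
requests = deque([512])      # one viewer has phoned in for page 512

first = build_pass(regular, requests)
second = build_pass(regular, requests)
print(len(first), first[-1], len(second))
```

The first pass carries the extra page at its end, and the next pass is back to the regular 120, so the loop only grows while there are outstanding requests.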

NABTS won the support of NBC, who launched a pilot NABTS service (confusingly called NBC Teletext) in 1981 and went into full service in 1983. CBS wasn't going to be left behind, and trialed and launched NABTS (as CBS ExtraVision) at the same time. That was an ignominious end for CBS's actual teletext pilot, which quietly shut down without ever having gone into full service. ExtraVision and NBC Teletext are probably the first US interactive TV services that consumers could actually buy and use.

Teletext was not dead, though. In 1982, Cincinnati station WKRC ran test broadcasts for a WST-based teletext service called Electra. WKRC's parent company, Taft, partnered with Zenith to develop a real US-market consumer WST decoder for use with the Electra service. In 1983, the same year that ExtraVision and NBC Teletext went live, Zenith teletext decoders appeared on the shelves of Cincinnati stores. They were plug-in modules for recent Zenith televisions, meaning that customers would likely also need to buy a whole new TV to use the service... but it was the only option, and seems to have remained that way for the life of US teletext.

I believe that Taft's Electra was the first teletext service to achieve a regular broadcast license. Through the mid 1980s, Electra would expand to more television stations, reaching similar penetration to the videotex services. In 1982, Keyfax (remember Keyfax? it was the one on WFLD in Chicago) had made the pivot from teletext to videotex as well, adopting the Prestel-derived technology from GTE. In 1984, Keyfax gave up on their broadcast television component and became a telephone modem service only. Electra jumped on the now-free VBI lines of WFLD and launched in Chicago. WTBS in Atlanta carried Electra, and then in the biggest expansion of teletext, Electra appeared on SPN---a satellite network that would later become CNBC.

While major networks, and major companies like GTE and AT&T, pushed for the videotex NABTS, teletext continued to have its supporters among independent stations. Los Angeles's KTTV started its own teletext service in 1984, which combined locally-developed pages with national news syndicated from Electra. This seemed like the start of a promising model for teletext across independent stations, but it wasn't often repeated.

Oh, and KSL? At some point, uncertain to me but before 1984, they switched to NABTS.

Let's stop for a moment and recap the situation. Between about 1978 and 1984, over a dozen major US television stations launched interactive TV offerings using four major protocols that fell into two general categories. One of those categories was one-way over television while the other was two-way over telephone or one-way over television with some operators offering both. Several TV stations switched between types. The largest telcos and TV networks favored one option, but it was significantly more expensive than the other, leading smaller operators to choose differently. The hardware situation was surprisingly straightforward in that, within teletext and videotex, consumers only had one option and it was very expensive.

Oh, and that's just the technical aspects. The business arrangements could get even stranger. Teletext services were generally free, but videotex services often charged a service fee. This was universally true for videotex services offered over telephone and often, but not always, true for videotex services over cable. Were the videotex services over cable even videotex? Doesn't that contradict the definition I gave earlier? Is that why NBC called their videotex service teletext? And isn't videotex over telephone barely differentiated from computer-based services like CompuServe and The Source that were gaining traction at the same time?

I think this all explains the failure of interactive TV in the 1980s. As you've seen, it's not that no one tried. It's that everyone tried, and they were all tripping over each other the entire time. Even in Canada, where the government had sponsored development of the Telidon system ground-up to be a nationwide standard, the influence of US teletext services created similar confusion. For consumers, there were so many options that they didn't know what to buy, and besides, the price of the hardware was difficult to justify with the few stations that offered teletext. The fact that teletext had been hyped as the "next big thing" by newspapers since 1978, and only reached the market in 1983 as a shambolic mess, surely did little for consumer confidence.

You might wonder: where was the FCC during this whole thing? In the US, we do not have a state broadcaster, but we do have state regulation of broadcast media that is really quite strict as to content and form. During the late '70s, under those first experimental licenses, the general perception seemed to be that the FCC was waiting for broadcasters to evaluate the different options before selecting a nationwide standard. Given that the FCC had previously dictated standards for television receivers, it didn't seem like that far of a stretch to think that a national-standard teletext decoder might become mandatory equipment on new televisions.

Well, it was political. The long, odd experimental period from 1978 to 1983 was basically a result of FCC indecision. The commission wasn't prepared to approve anything as a national standard, but the lack of approval meant that broadcasters weren't really allowed to use anything outside of limited experimental programs. One assumes that they were being aggressively lobbied by every side of the debate, which no doubt factored into the FCC's 1981 decision that teletext content would be unregulated, and 1982 statements from commissioners suggesting that the FCC would not, in fact, adopt any technical standards for teletext.

There is another factor wrapped up in this whole story, another tumultuous effort to deliver text over television: closed captioning. PBS introduced closed captioning in 1980, transmitting text over line 21 of the VBI for decoding by a set-top box. There are meaningful technical similarities between closed captioning and teletext, to the extent that the two became competitors. Some broadcasters that added NABTS dropped closed captioning because of incompatibility between the equipment in use. This doesn't seem to have been a real technical constraint, and was perhaps more likely cover for a cost-savings decision, but it generated considerable controversy that led to the National Association of the Deaf organizing for closed captioning and against teletext.

The topic of closed captioning continued to haunt interactive TV. TV networks tended to view teletext or videotex as the obvious replacements for line 21 closed captioning, due to their more sophisticated technical features. Of course, the problems that limited interactive TV adoption in general, high cost and fragmentation, made it unappealing to the deaf. Closed captioning had only just barely become well-standardized in the mid-1980s and its users were not keen to give it up for another decade of frustration. While some deaf groups did support NABTS, the industry still set up a conflict between closed captioning and interactive TV that must have contributed to the FCC's cold feet.

In April of 1983, at the dawn of US broadcast teletext, the FCC voted 6-1 to allow television networks and equipment manufacturers to support any teletext or videotex protocol of their choice. At the same time, they declined to require cable networks to carry teletext content from broadcast television stations, making it more difficult for any TV network to achieve widespread adoption [3]. The FCC adopted what was often termed a "market-based" solution to the question of interactive TV.

The market would not provide that solution. It had already failed.

In November of 1983, Time ended their teletext service. That's right, Time used to have a TV network and it used to have teletext; it was actually one of the first on the market. It was also the first to fall, but they had company. CBS and NBC had significantly scaled back their NABTS programs, which were failing to make any money because of the lack of hardware that could decode the service.

On the WST side of the industry, Taft reported poor adoption of Electra and Zenith reported that they had sold very few decoders, so few that they were considering ending the product line. Taft was having a hard time anyway, going through a rough reorganization in 1986 that seems to have eliminated most of the budget for Electra. Electra actually seems to have still been operational in 1992, an impressive lifespan, but it says something about the level of adoption that we have to speculate as to the time of death. Interactive TV services had so little adoption that they ended unnoticed, and by 1990, almost none remained.

Conflict with closed captioning still haunted teletext. There had been some efforts towards integrating teletext decoders into TV sets, by Zenith for example, but in 1990 line 21 closed caption decoding became mandatory. The added cost of a closed captioning decoder, and the similarity to teletext, seems to have been enough for the manufacturing industry to decide that teletext had lost the fight. Few, possibly no teletext decoders were commercially available after that date.

In Canada, Telidon met a similar fate. Most Telidon services were gone by 1986, and it seems likely that none were ever profitable. On the other hand, the government-sponsored, open-standards nature of Telidon meant that it and descendants like NABTS saw a number of enduring niche uses. Environment Canada distributed weather data via a dedicated Telidon network, and Transport Canada installed Telidon terminals in airports to distribute real-time advisories. Overall, the Telidon project is widely considered a failure, but it has had enduring impact. The original vector drawing language, the idea that had started the whole thing, came to be known as NAPLPS, the North American Presentation Layer Protocol Syntax. NAPLPS had some conceptual similarities to HTML, as Telidon's concept of interlinking did to the World Wide Web. That similarity wasn't just theoretical: Prodigy, the second largest information service after CompuServe and first to introduce a GUI, ran on NAPLPS. Prodigy is now viewed as an important precursor to the internet, but seen in a different light, it was just another videotex---but one that actually found success.

I know that there are entire branches of North American teletext and videotex and interactive TV services that I did not address in this article, and I've become confused enough in the timeline and details that I'm sure at least one thing above is outright wrong. But that kind of makes the point, doesn't it? The thing about teletext here is that we tried, we really tried, but we badly fumbled it. Even if the internet hadn't happened, I'm skeptical that interactive television efforts would have gotten anywhere without a complete fresh start. And the internet did happen, so abruptly that it nearly killed the whole concept while television carriers were still tossing it around.

Nearly killed... but not quite. Even at the beginning of the internet age, televisions were still more widespread than computers. In fact, from a TV point of view, wasn't the internet a tremendous opportunity? Internet technology and more compact computers could enable more sophisticated interactive television services at lower prices. At least, that's what a lot of people thought. I've written before about Cablesoft and it is just one small part of an entire 1990s renaissance of interactive TV. There are a few major 1980s-era services that I didn't get to here either. Stick around and you'll hear more.

You know what's sort of funny? Remember the AgVision, the first form of the TRS-80? It was built as a client for AGTEXT, a joint project of Kentucky Educational Television (who carried it on the VBI of their television network) and the Kentucky College of Agriculture. At some point, AGTEXT switched over to the line 21 closed captioning protocol and operated until 1998. It was almost the first teletext service, and it may very well have been the last.

[1] There's this weird thing going on where I keep tangentially bringing up Bonnevilles. I think it's just a coincidence of what order I picked topics off of my list but maybe it reflects some underlying truth about the way my brain works. This Bonneville, Bonneville International, is a subsidiary of the LDS Church that owns television and radio stations. It is unrelated, except by being indirectly named after the same person, to the Bonneville Power Administration that operated an early large-area microwave communications network.

[2] There were of course large TV networks in the US, and they will factor into the story later, but they still relied on a network of independent but affiliated stations to reach their actual audience---which meant a degree of technical inconsistency that made it hard to roll out nationwide VBI services. Providing another hint at how centralization vs. decentralization affected these datacasting services, adoption of new datacasting technologies in the US has often been highest among PBS and NPR affiliates, our closest equivalents to something like the BBC or ITV.

[3] The regulatory relationship between broadcast TV stations, cable network TV stations, and cable carriers is a complex one. The FCC's role in refereeing the competition between these different parts of the television industry, which are all generally trying to kill each other off, has led to many odd details of US television regulation and some of the everyday weirdness of the American TV experience. It's also another area where the US television industry stands in contrast to the European television industry, where state-owned or state-chartered broadcasting meant that the slate of channels available to a consumer was generally the same regardless of how they physically received them. Not so in the US! This whole thing will probably get its own article one day.
