Web Perf Hero: Máté Szabó

MediaWiki is the platform that powers Wikipedia and other Wikimedia projects. These sites receive a lot of traffic, and we want to give our audience the best experience and performance possible. The efficiency of the MediaWiki platform is therefore of great importance to us and our readers.

MediaWiki is a relatively large application with 645,000 lines of PHP code in 4,600 PHP files, and growing! (Reported by cloc.) When you have as much traffic as Wikipedia, working on such a project can create interesting problems.

MediaWiki uses an “autoloader” to find and import classes from PHP files into memory. In PHP, this happens on every single request, as each request gets its own process. In 2017, we introduced support for loading classes from PSR-4 namespace directories (in MediaWiki 1.31). This mechanism involves checking which directory contains a given class definition.

Problem statement

Kunal (@Legoktm) noticed that after MediaWiki 1.35, wikis became slower because they spent more time in fstat system calls. A system call makes a program switch to kernel mode, which is expensive.

We learned that our Autoloader was the one making the fstat calls, to check whether files exist. This logic powers the PSR-4 namespace feature, and it actually existed before MediaWiki 1.35. However, it only became noticeable after we introduced the HookRunner system, which loaded over 500 new PHP interfaces via the PSR-4 mechanism.

MediaWiki’s Autoloader has a class map array that maps class names to their file paths on disk. PSR-4 classes do not need to be present in this map. Before introducing HookRunner, very few classes in MediaWiki were loaded by PSR-4. The new hook files relied on PSR-4, which triggered many calls to file_exists() for PSR-4 directory searching in every request. These calls add up quickly and degrade MediaWiki performance.

See task T274041 on Phabricator for the collaborative investigation between volunteers and staff.

Solution: Optimized class map

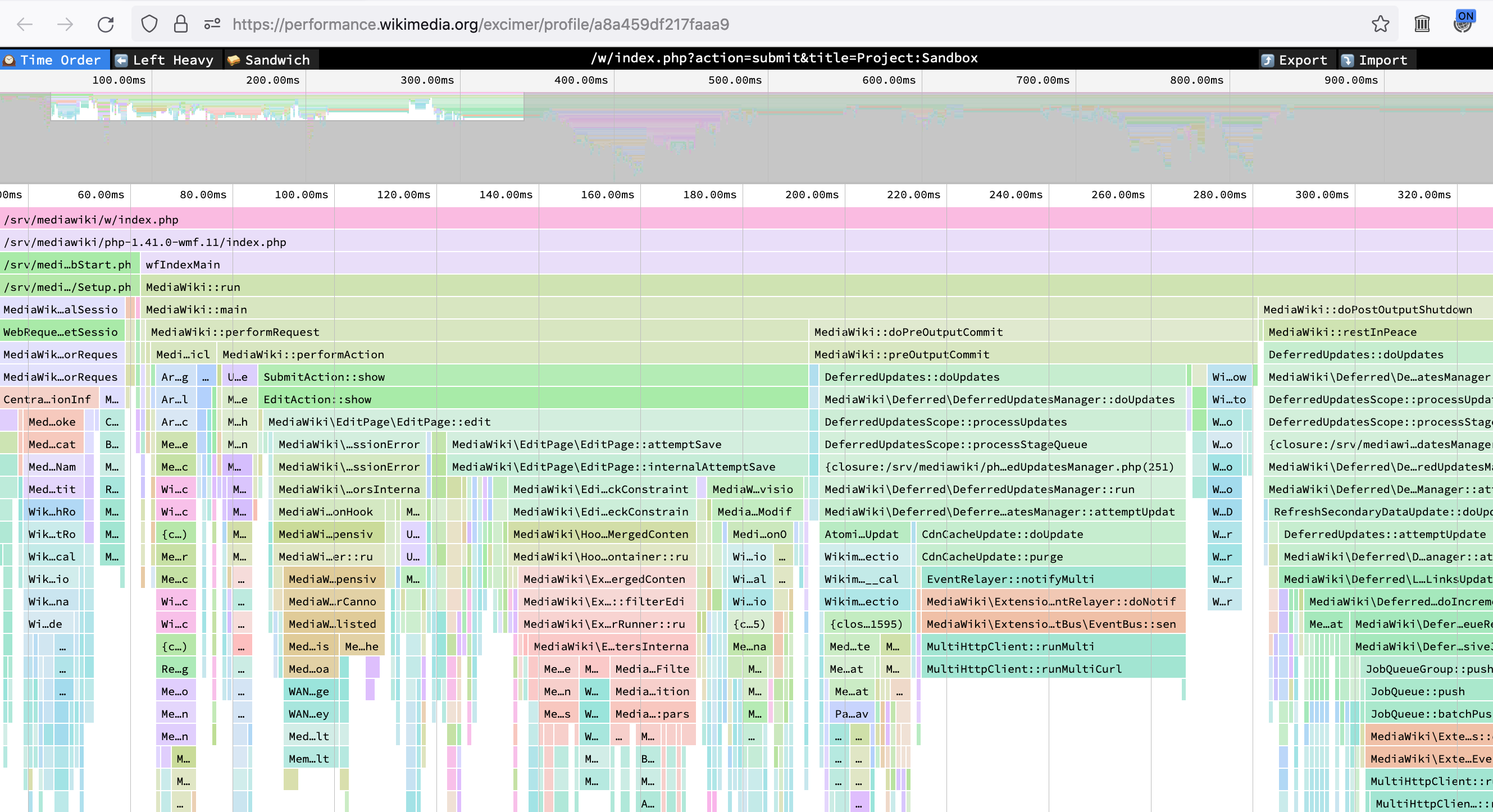

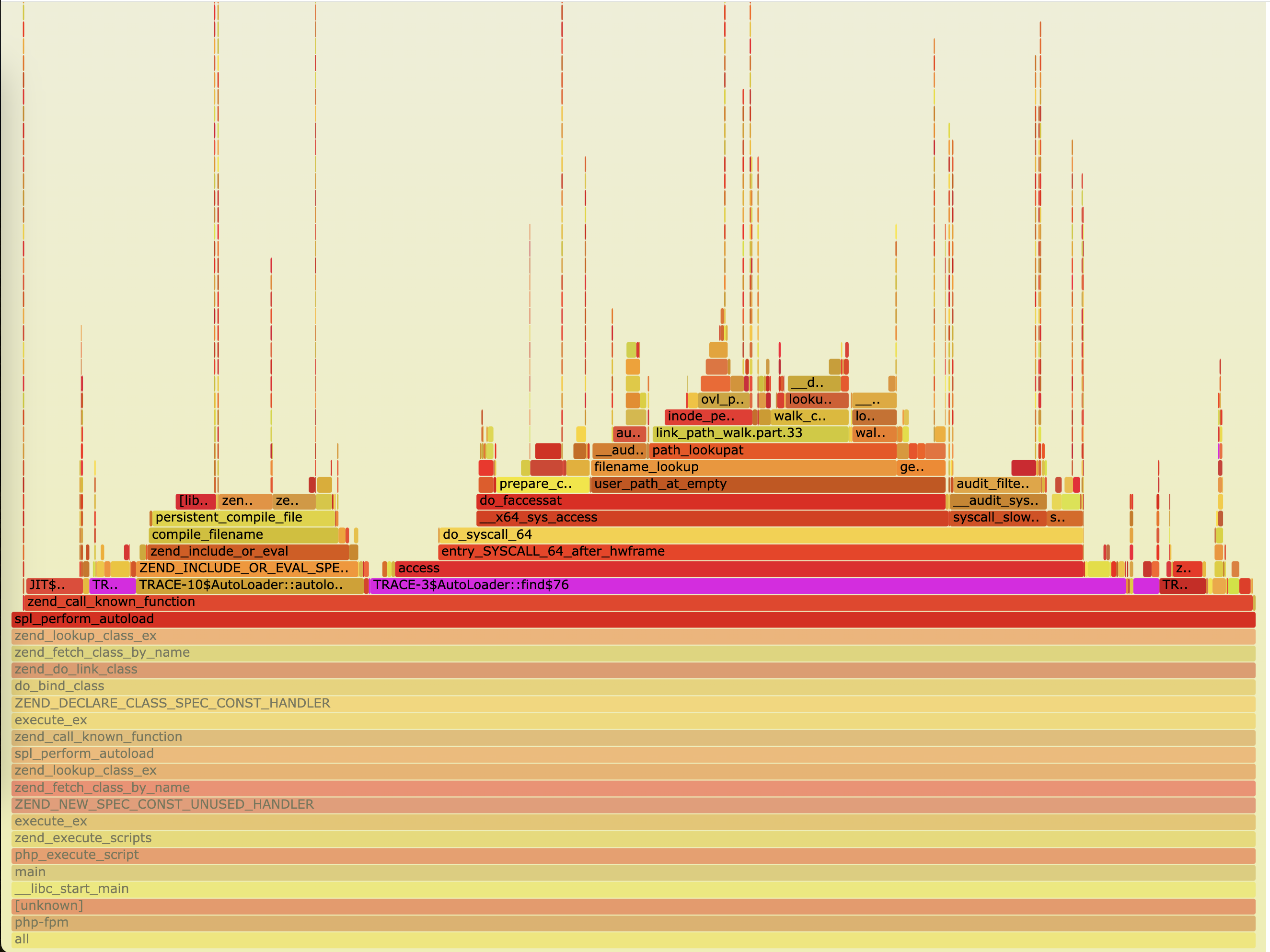

Máté Szabó (@TK-999) took a deep dive and profiled a local MediaWiki install with php-excimer and generated a flame graph. He found that about 16.6% of request time was spent in the Autoloader::find() method, which is responsible for finding which file contains a given class.

Checking for file existence during PSR-4 autoloading seems necessary because one namespace can correspond to multiple directories that promise to define some of its classes. The search logic has to check each directory until it finds a class file. Only when the class is not found anywhere may the program crash with a fatal error.
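To make the cost concrete, here is a minimal sketch of such a search. It is not MediaWiki's actual Autoloader code: the `findPsr4()` function, the `Demo\` namespace, and the temporary directories are all hypothetical, and the counter exists only to show how many file system checks a single lookup needs.

```php
<?php
// Hypothetical PSR-4 lookup: one namespace prefix may map to several directories.
function findPsr4(string $class, array $psr4, int &$checks): ?string {
    foreach ($psr4 as $prefix => $dirs) {
        if (strncmp($class, $prefix, strlen($prefix)) !== 0) {
            continue; // the class is not in this namespace
        }
        // Turn the rest of the class name into a relative file path.
        $relative = str_replace('\\', '/', substr($class, strlen($prefix))) . '.php';
        foreach ($dirs as $dir) {
            $checks++; // every candidate directory costs one file system check
            $path = "$dir/$relative";
            if (file_exists($path)) {
                return $path;
            }
        }
    }
    return null; // not found anywhere
}

// Demo setup: two temporary directories claim the namespace,
// but the class file only exists in the second one.
$base = sys_get_temp_dir() . '/psr4-demo-' . uniqid();
mkdir("$base/a/Hook", 0777, true);
mkdir("$base/b/Hook", 0777, true);
file_put_contents("$base/b/Hook/FooHook.php", "<?php\n");

$psr4 = ['Demo\\' => ["$base/a", "$base/b"]];
$checks = 0;
$path = findPsr4('Demo\\Hook\\FooHook', $psr4, $checks);
```

In this sketch, one class lookup already needs two `file_exists()` calls. Multiplied across hundreds of interfaces on every request, that is the kind of overhead described above.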

Máté avoided the directory searching cost by expanding MediaWiki’s Autoloader class map to include all classes, including those registered via PSR-4 namespaces. This solution makes use of a hash-map, where each class maps to one and only one file path on disk, making it a 1-to-1 mapping.

This means the Autoloader::find() method no longer has to search through the PSR-4 directories. It now knows upfront where each class is, by merely accessing the array from memory. This removes the need for file existence checks. This approach is similar to the autoloader optimization flag in Composer.
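The idea can be sketched as follows. This is an illustration under assumptions, not the actual patch: the `findInClassMap()` function, the class names, and the file paths are hypothetical, and a real class map would be generated by a script rather than written by hand.

```php
<?php
// Hypothetical class map, built ahead of time. The paths are illustrative only.
$classMap = [
    'Demo\\Hook\\FooHook' => '/srv/demo/includes/Hook/FooHook.php',
    'Demo\\Title'         => '/srv/demo/includes/Title.php',
];

function findInClassMap(string $class, array $classMap): ?string {
    // A single in-memory hash lookup: no file_exists() call and no directory loop.
    return $classMap[$class] ?? null;
}

$found = findInClassMap('Demo\\Hook\\FooHook', $classMap);
$missing = findInClassMap('Demo\\Nope', $classMap);
```

The trade-off is that the map must be regenerated whenever a class is added, moved, or renamed, which is why this kind of map is usually produced by tooling.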

Impact

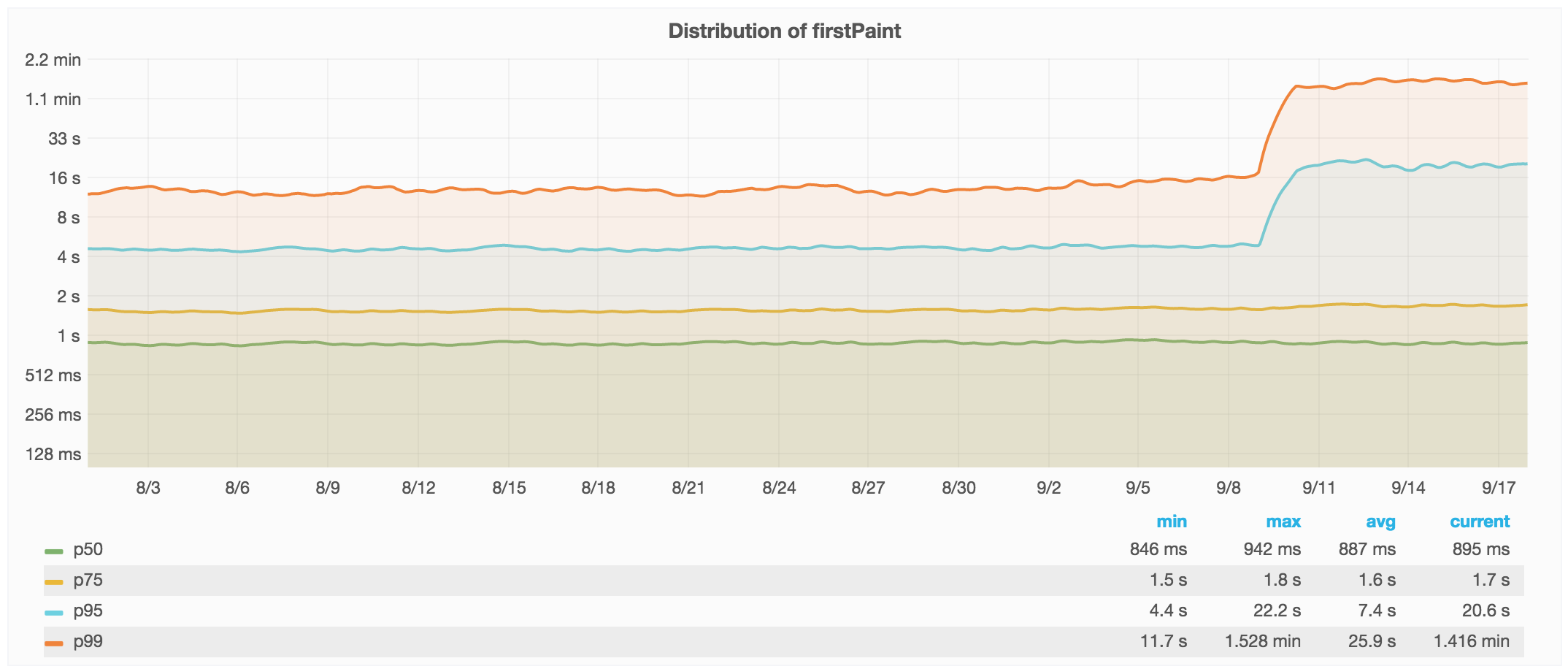

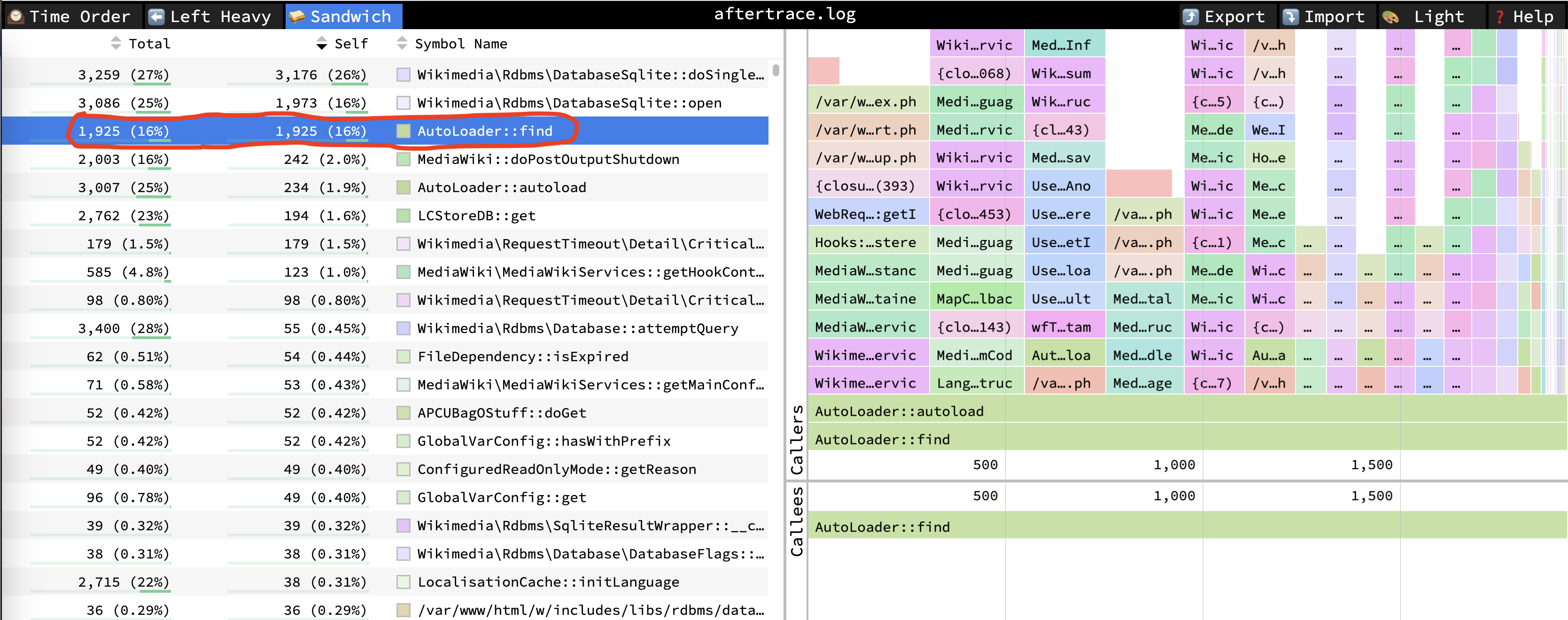

Máté’s optimization significantly reduced response time by speeding up the Autoloader::find() method, largely through the elimination of file system calls.

After deploying the change to MediaWiki appservers in production, we saw a major shift in response times toward faster buckets: a ~20% increase in requests completed within 50ms, and a ~10% increase in requests served under 100ms (T274041#8379204).

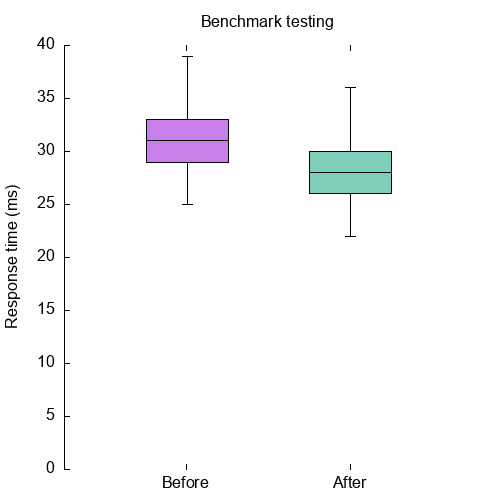

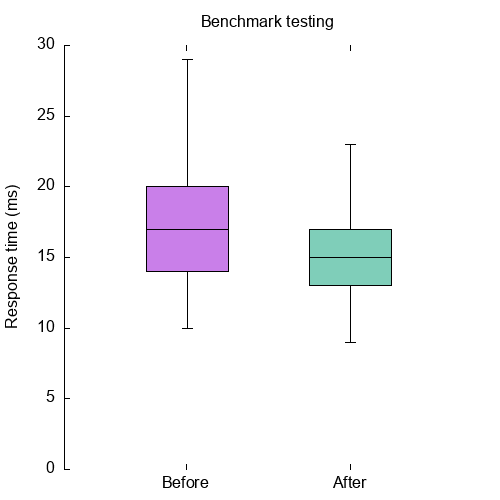

Máté analyzed the baseline and classmap cases locally, benchmarking 4800 requests, controlled at exactly 40 requests per second. He found latencies reduced on average by ~12%:

| Latencies | Baseline | Full classmap |
|---|---|---|
| p50 (median) | 26.2ms | 22.7ms (~13.3% faster) |
| p90 | 29.2ms | 25.7ms (~11.8% faster) |
| p95 | 31.1ms | 27.3ms (~12.3% faster) |
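For readers unfamiliar with percentile latencies, the sketch below shows one common way to compute them (the nearest-rank method) and how a "percent faster" figure can be derived. The helper functions and the sample latencies are hypothetical, and we do not know which percentile method the benchmark tool used.

```php
<?php
// Nearest-rank percentile: the smallest sample such that at least
// $p percent of all samples are less than or equal to it.
function percentile(array $samples, float $p): float {
    sort($samples);
    $rank = (int) ceil($p * count($samples) / 100);
    return (float) $samples[max($rank, 1) - 1];
}

// Relative improvement of $after over $before, in percent.
function percentFaster(float $before, float $after): float {
    return ($before - $after) / $before * 100;
}

// Hypothetical latencies in milliseconds, NOT the benchmark data.
$baseline = [25.0, 26.0, 26.5, 27.0, 28.0, 29.0, 30.0, 31.0, 32.0, 40.0];
$p50 = percentile($baseline, 50);
$p90 = percentile($baseline, 90);

// Applied to the rounded p50 values from the table above.
$gain = percentFaster(26.2, 22.7);
```

Recomputing from the rounded table values may differ slightly from the published percentages, which were presumably derived from unrounded measurements.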

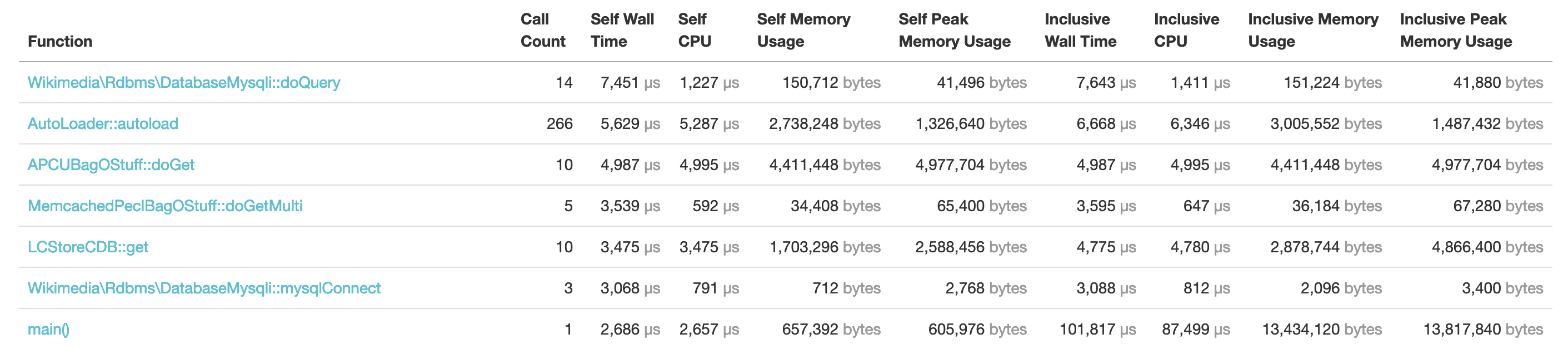

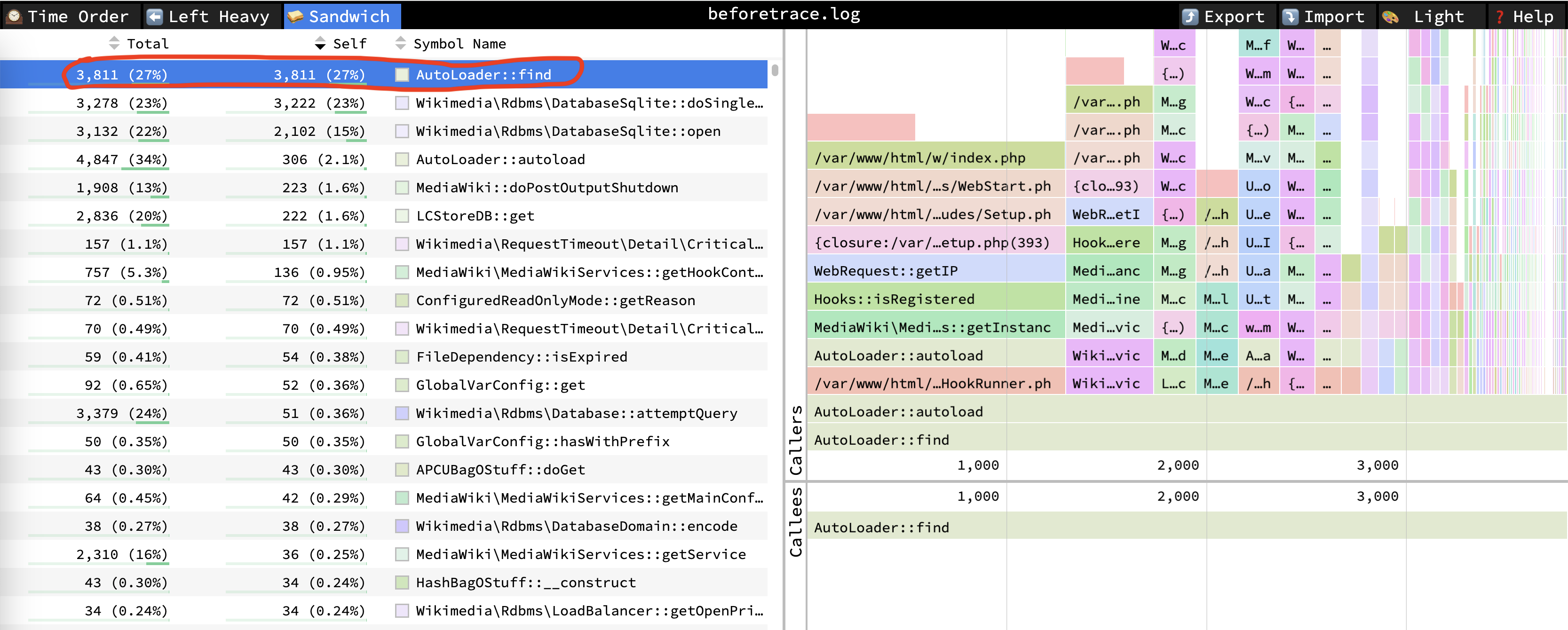

We reproduced Máté’s findings locally as well. On the Git commit right before his patch, Autoloader::find() really stands out.

NOTE: We used ApacheBench to load the /wiki/Main_Page URL from a local MediaWiki installation with PHP 8.1 on an Apple M1. We ran it both in a bare metal environment (PHP built-in webserver, 8 workers, no APCu), and in MediaWiki-Docker. We configured our benchmark to run 1000 requests with 7 concurrent requests. The profiles were captured using Excimer with a 1ms interval. The flame graphs were generated with Speedscope, and the box plots were created with Gnuplot.
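As a rough illustration of the profiling step, the following sketch samples a piece of work with Excimer at a 1ms interval. It is not the exact setup we used: the `profileCollapsed()` wrapper and the stand-in workload are hypothetical, the choice of wall-clock sampling is an assumption, and the function simply returns null when the excimer extension is not installed.

```php
<?php
// Returns collapsed stack samples for $work, or null if Excimer is unavailable.
function profileCollapsed(callable $work): ?string {
    if (!extension_loaded('excimer')) {
        return null;
    }
    $profiler = new ExcimerProfiler();
    $profiler->setPeriod(0.001);            // sample every 1 ms
    $profiler->setEventType(EXCIMER_REAL);  // wall-clock time (an assumption)
    $profiler->start();
    $work();
    $profiler->stop();
    // One line per unique stack with its sample count (folded-stack text).
    return $profiler->getLog()->formatCollapsed();
}

$output = profileCollapsed(function () {
    usleep(20000); // stand-in workload, roughly 20 ms
});
```

The resulting text can be saved to a file and opened in a flame graph viewer.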

In Figures 4 and 5, the “After” box plot has a lower median than the “Before” box plot. This means there is a reduction in latency. The spread in the “After” scenario also shrank, which indicates that responses were more consistently fast (not only on average). This increases the percentage of our users who have an experience very close to the average response time of web requests. Fewer users now experience extremely slow responses.

Web Perf Hero award

The Web Perf Hero award is given to individuals who have gone above and beyond to improve the web performance of Wikimedia projects. The initiative is led by the Performance Team and started mid-2020. It is awarded quarterly and takes the form of a Phabricator badge.

Read about past recipients at Web Perf Hero award on Wikitech.