Chasing solitons

Every once in a while, I dive into a topic in science for no reason other than that I find it interesting. This is how I learnt about Titan, laser-cooling, and random walks. This post is about the fourth topic in this series: solitons.

A soliton is a stable wave that maintains its shape and characteristics as it moves around. In 1834, a civil engineer named John Scott Russell spotted a single wave moving through the Edinburgh and Glasgow Union Canal in Scotland. He described it thus in a report to the British Association for the Advancement of Science in 1844 (pp. 319-320):

I was observing the motion of a boat which was rapidly drawn along a narrow channel by a pair of horses, when the boat suddenly stopped—not so the mass of water in the channel which it had put in motion; it accumulated round the prow of the vessel in a state of violent agitation, then suddenly leaving it behind, rolled forward with great velocity, assuming the form of a large solitary elevation, a rounded, smooth and well-defined heap of water, which continued its course along the channel apparently without change of form or diminution of speed. I followed it on horseback, and overtook it still rolling on at a rate of some eight or nine miles an hour [14 km/h], preserving its original figure some thirty feet [9 m] long and a foot to a foot and a half [30−45 cm] in height. Its height gradually diminished, and after a chase of one or two miles [2–3 km] I lost it in the windings of the channel.

Such, in the month of August 1834, was my first chance interview with that singular and beautiful phenomenon which I have called the Wave of Translation, a name which it now very generally bears; which I have since found to be an important element in almost every case of fluid resistance, and ascertained to be the type of that great moving elevation in the sea, which, with the regularity of a planet, ascends our rivers and rolls along our shores.

Russell was able to reproduce a similar wave in a water tank and study its properties. American physicists later called this wave a 'soliton' because of its solitary nature as well as to recall the name of particles like protons and electrons (to which waves are related by particle-wave duality).

Solitons are unusual in many ways. They are very stable, for one: Russell was able to follow his soliton for almost 3 km before he lost sight of it. Solitons are able to collide with each other and still come away intact. There are types of solitons with still more peculiar properties.

These entities are not easy to find: they arise due to the confluence of unusual circumstances. For example, Russell's "wave of translation" was born when a boat moving in a canal suddenly stopped, pushing a single wave in front that kept going. The top speed at which a wave can move on the surface of a water body is limited by the depth of the body. This is why a tsunami generated in the middle of the ocean can travel rapidly towards the shore, but as it gets closer and the water becomes shallower, it slows down. (Since it must also conserve energy, the kinetic energy it must shed goes into increasing its amplitude, so the tsunami becomes enormous when it strikes land.)

In fluid dynamics, the ratio of the speed of a vessel to the maximum speed of a wave in the water it is moving in is called the Froude number. That maximum speed is proportional to the square root of the depth of the water. If the vessel was moving at the maximum speed of a wave in the Union Canal, the Froude number would have been 1.
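To put rough numbers on this, here's a minimal sketch in Python. It assumes the standard shallow-water result that the top wave speed is √(g·h), where g is the acceleration due to gravity and h is the depth, so the Froude number is the vessel's speed divided by √(g·h). The 1.5 m depth is a made-up figure for illustration, not the Union Canal's actual depth, and the function names are hypothetical.

```python
import math

G = 9.81  # acceleration due to gravity, m/s^2


def max_wave_speed(depth_m):
    """Top speed of a shallow-water wave, sqrt(g * h), in m/s."""
    return math.sqrt(G * depth_m)


def froude_number(speed_m_s, depth_m):
    """Vessel speed divided by the top wave speed at this depth."""
    return speed_m_s / max_wave_speed(depth_m)


# Hypothetical canal depth, chosen only for illustration.
depth = 1.5
print(f"top wave speed: {max_wave_speed(depth):.2f} m/s")

# Russell's wave moved at about 14 km/h; convert to m/s.
russell_speed = 14 / 3.6
print(f"Froude number at 14 km/h: {froude_number(russell_speed, depth):.2f}")

# The same formula applied to a tsunami over 4 km of open ocean.
print(f"tsunami speed: {max_wave_speed(4000) * 3.6:.0f} km/h")
```

The last line also shows why a tsunami slows down near the shore: the only thing that changes in the formula is the depth.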

If the Froude number had been 0.7, the vessel would have generated V-shaped pairs of waves about its prow, reminiscent of the common sight of a ship cutting through water.

Suppose the vessel then started to speed up and its Froude number approached 1. This would have caused the waves generated off the sides to bend away from the prow and straighten at the front. This is the genesis of a soliton. Since the Union Canal has a fixed width, waves forming at the front of the vessel would have had fewer opportunities to dissipate and would thus have kept moving forward.

Since the boat stopped, it produced the single soliton that won Russell's attention. If it had kept moving, it would have produced a series of solitons in the water, and at the same time have acquired a gentle up-and-down oscillating motion of its own as the Froude number exceeded 1.

Waves occur in a wide variety of contexts in the real world, and in the right conditions scientists expect to find solitons in almost all of them. For example, they have been spotted in optical fibres that carry light waves, in materials carrying a moving wave of magnetisation, and in water currents at the bottom of the ocean.

In the wave physics used to understand these various phenomena, a soliton is said to emerge as a solution to non-linear partial differential equations.

The behaviour of some systems can be described using partial differential equations. The plucked guitar string is a classic example. The string is fixed at both ends; when it is plucked, a wave travels along its length producing the characteristic sound. The corresponding equation goes like this: ∂²u/∂t² = c² · ∂²u/∂x², where u is the string's displacement, x is the position along the string, c is the speed at which the wave travels along it, and t is of course the time elapsed.

The equation itself is not important. The point is that there's a left-hand side and a right-hand side, and one side can equal the other for different combinations of u, x, and t. Each such combination is called a solution. One particular solution is called the soliton when the corresponding wave meets three conditions: it's localised, preserves its shape and speed, and doesn't lose energy when interacting with other solitons.
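To make "solution" concrete, here's a rough numerical check of the guitar-string equation. It uses a standing wave, u = sin(πx/L) · cos(πct/L), which is a textbook solution for a string of length L fixed at both ends, and compares it with a look-alike that oscillates at the wrong rate. The values of L and c, the step size, and the function names are all arbitrary choices for illustration.

```python
import math

LENGTH = 1.0  # string length (illustrative value)
C = 2.0       # wave speed (illustrative value)
H = 1e-4      # step used for the finite-difference estimates


def standing_wave(x, t):
    """Fundamental mode of a string fixed at x = 0 and x = LENGTH."""
    return math.sin(math.pi * x / LENGTH) * math.cos(math.pi * C * t / LENGTH)


def wrong_tempo(x, t):
    """Same shape, but oscillating at the wrong rate: not a solution."""
    return math.sin(math.pi * x / LENGTH) * math.cos(math.pi * t / LENGTH)


def second_derivative(f, z):
    """Central-difference estimate of the second derivative of f at z."""
    return (f(z + H) - 2 * f(z) + f(z - H)) / H**2


def both_sides(u, x, t):
    """Return the left- and right-hand sides of the wave equation at (x, t)."""
    left = second_derivative(lambda s: u(x, s), t)
    right = C**2 * second_derivative(lambda s: u(s, t), x)
    return left, right


for u in (standing_wave, wrong_tempo):
    left, right = both_sides(u, x=0.3, t=0.2)
    print(f"{u.__name__}: left = {left:.4f}, right = {right:.4f}")
```

For the standing wave the two sides agree to within the accuracy of the estimate; for the look-alike they don't, so it isn't a solution.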

The "non-linear" part of "non-linear partial differential equations" means that these equations describe waves whose properties differ depending on their amplitude. The guitar string equation is an example of a linear system because u, the string's displacement, has a power of 1 (i.e. it isn't squared or cubed) and the terms of the equation aren't multiplied with each other. Another famous example of a linear partial differential equation is the Schrödinger equation, which describes how the wave function of a quantum system will change over time given a set of initial conditions.

(The Austrian-Irish physicist Erwin Schrödinger postulated it in 1925, which is one of the reasons the UN has designated our current year — a century later — the International Year of Quantum Science & Technology.)

An example of a non-linear partial differential equation is the Korteweg-de Vries equation, which predicts how waves behave in shallow water: u_t + 6u · u_x + u_xxx = 0. The second term is the problem: u_x is a way to write ∂u/∂x, and since it is multiplied by 6u, the equation is non-linear, i.e. the way the wave changes depends on the wave's own height, so taller parts of a wave behave differently from shorter ones.
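One way to see what non-linearity costs, sketched here on the assumption that u and w are any two solutions of the Korteweg-de Vries equation: adding them together doesn't automatically give a third solution, as it would for the guitar string. Writing subscripts for partial derivatives and substituting u + w into the left-hand side leaves a cross term behind:

```latex
\begin{aligned}
(u+w)_t + 6(u+w)(u+w)_x + (u+w)_{xxx}
  &= \underbrace{u_t + 6uu_x + u_{xxx}}_{=\,0}
   + \underbrace{w_t + 6ww_x + w_{xxx}}_{=\,0}
   + 6\,(u\,w_x + w\,u_x) \\
  &= 6\,\partial_x (u\,w).
\end{aligned}
```

The leftover vanishes only where the product of the two waves doesn't change with x, for example where they don't overlap. Wherever they do overlap, the waves interact instead of simply adding up.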

But for better or for worse, this is the only milieu in which a soliton will emerge.

(If you're really interested: a soliton solution of the Korteweg-de Vries equation looks like this: u = A sech² [ k (x − vt − x₀) ], where A is the soliton's amplitude or maximum height, k is a term related to its width, x₀ is its initial position, v is its velocity, and t is the time elapsed. 'sech' is the hyperbolic secant function.)
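As a sanity check, here's a minimal sketch that plugs this soliton into the Korteweg-de Vries equation numerically. It assumes the standard result for this form of the equation that the constants aren't independent: A = 2k² and v = 4k², so taller solitons are also narrower and faster. The function names, the sample point, and the step size are arbitrary choices for illustration.

```python
import math


def soliton(x, t, k=0.5, x0=0.0, A=None, v=None):
    """u = A sech^2[k (x - v t - x0)], defaulting to A = 2k^2 and v = 4k^2."""
    A = 2 * k**2 if A is None else A
    v = 4 * k**2 if v is None else v
    return A / math.cosh(k * (x - v * t - x0)) ** 2


def kdv_residual(u, x, t, h=1e-3):
    """Estimate u_t + 6 u u_x + u_xxx at (x, t) with central differences."""
    u_t = (u(x, t + h) - u(x, t - h)) / (2 * h)
    u_x = (u(x + h, t) - u(x - h, t)) / (2 * h)
    u_xxx = (u(x + 2 * h, t) - 2 * u(x + h, t)
             + 2 * u(x - h, t) - u(x - 2 * h, t)) / (2 * h**3)
    return u_t + 6 * u(x, t) * u_x + u_xxx


# With matched constants the residual is close to zero
# (limited only by the finite-difference step).
print(kdv_residual(soliton, x=1.0, t=0.0))


def too_tall(x, t):
    """Same width and speed, but twice the matched height."""
    return soliton(x, t, A=1.0)


# Doubling the height alone breaks the balance: no longer a solution.
print(kdv_residual(too_tall, x=1.0, t=0.0))
```

The second case is the non-linearity at work: unlike a wave on a guitar string, a soliton can't simply be scaled up and remain a solution.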

Physicists are more interested in particular types of soliton than others because they closely mimic specific phenomena in the real world. Sometimes it's a good idea to understand these phenomena as if they were solitons because the mathematics of the latter may be easier to work with. This lucid Institute of Physics video starring theoretical physicist David Tong sets out the quirky case of quarks.

I myself was more piqued by the Peregrine and breather solitons.

The Peregrine soliton isn't a soliton that travels. Its name comes from its discoverer, a British mathematician named Howell Peregrine. In fact, one of the things that distinguish a Peregrine soliton is that it's stuck in one place. More specifically it emerges from pre-existing waves, has a much greater amplitude than the background, and appears at and disappears from a single location in a blip.

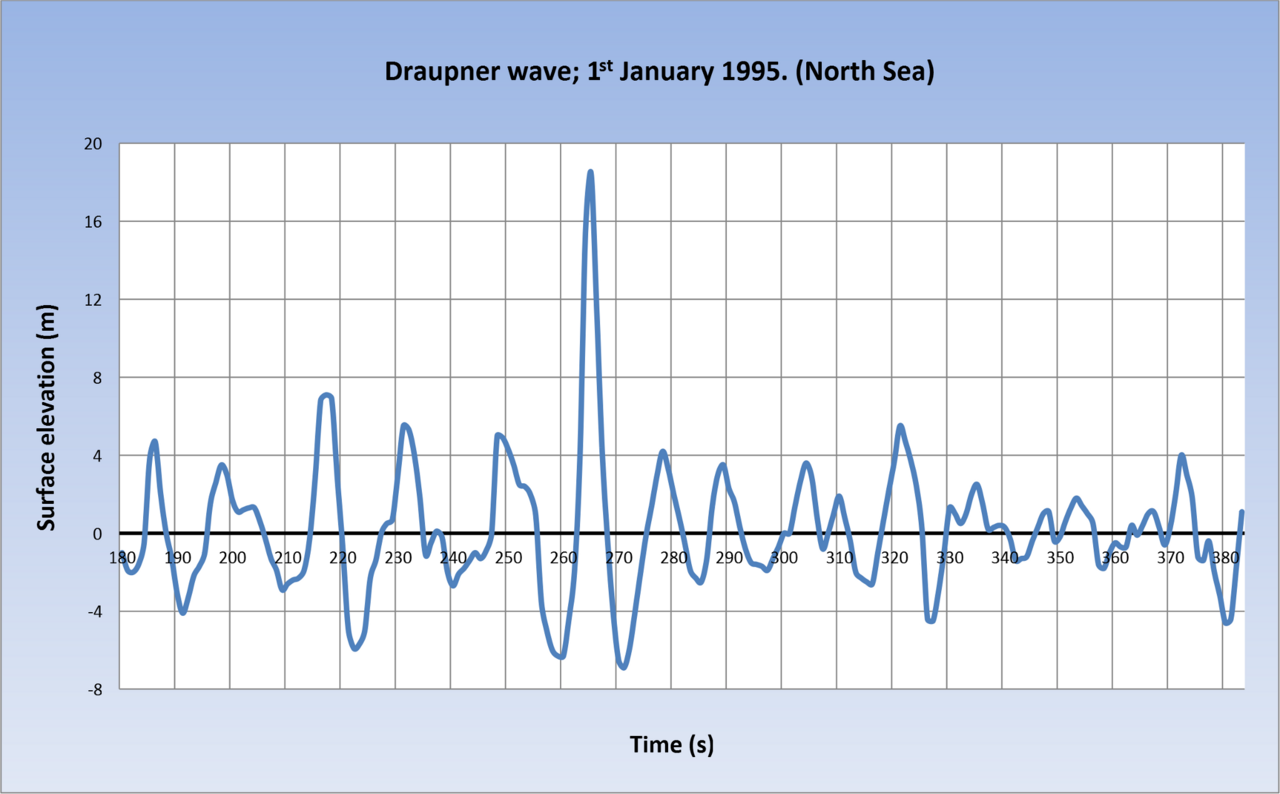
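The "blip" can be sketched numerically. A caveat: this post doesn't write down the Peregrine soliton's formula, so what follows is the standard textbook expression for it as a solution of an equation called the non-linear Schrödinger equation (a relative of the quantum-mechanical one, not the same thing), in the dimensionless form i·ψ_t + ½·ψ_xx + |ψ|²·ψ = 0 and in units where the background wave has a height of 1. The function name is hypothetical.

```python
import cmath


def peregrine(x, t):
    """Peregrine soliton on a background of amplitude 1 (dimensionless units)."""
    rational = 4 * (1 + 2j * t) / (1 + 4 * x**2 + 4 * t**2)
    return (1 - rational) * cmath.exp(1j * t)


# Height of the wave envelope at the centre (x = 0) as time passes.
for t in (-10, -1, 0, 1, 10):
    print(f"t = {t:>3}: |psi| = {abs(peregrine(0, t)):.3f}")
```

At x = 0 and t = 0 the bracket equals 1 − 4 = −3, so the envelope peaks at three times the height of the background, and it relaxes back towards 1 far away in either space or time.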

Peregrine solitons are interesting because they have been used to explain killer waves: freakish waves in the open sea that have no discernible cause and tower over all the other waves. One famous example is the Draupner wave, which was the first killer wave to also be measured by an instrument as it happened. It occurred on January 1, 1995, near the Draupner platform, a natural-gas rig in the Norwegian part of the North Sea. This is the wave's sounding chart:

That's one heck of a soliton.

The breather soliton is equally remarkable. It's a regular soliton whose amplitude, frequency or some other property also oscillates as it moves around. Imagine a breather soliton to be a soliton in water: it might look like a wave with an undulating shape, its surface heaving one moment and sagging the next like the head of a strange sea monster breathing as it glides along. This is exactly the spirit in which the breather soliton was named.

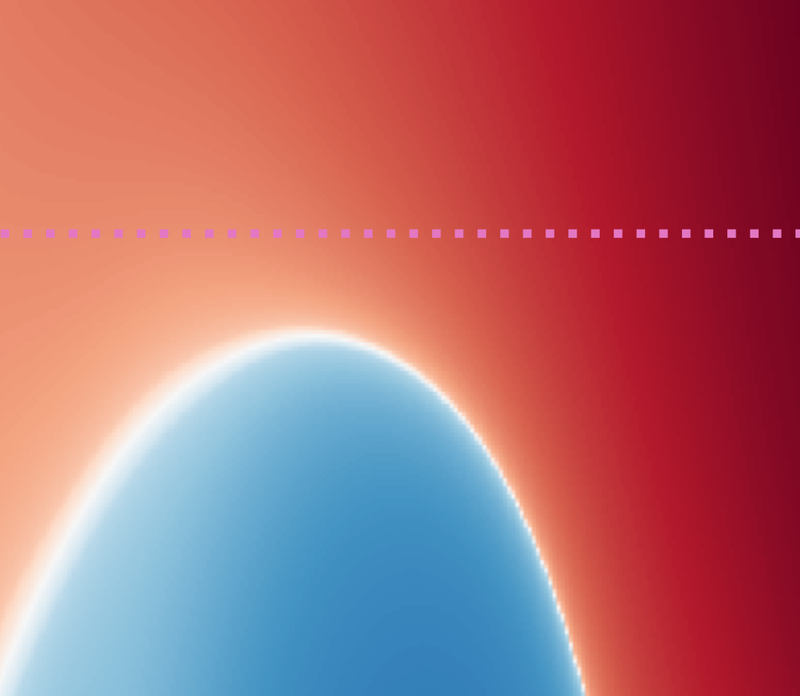

Here's an animation of a particular variety called the sine-Gordon breather soliton:
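To recreate a few frames of such a breather by hand, here's a hedged sketch. It assumes the sine-Gordon equation in the dimensionless form φ_tt − φ_xx + sin φ = 0 and the standard stationary breather solution for it. ω is the breathing frequency and must lie between 0 and 1; the value 0.6 and the function name are arbitrary choices.

```python
import math


def sg_breather(x, t, omega=0.6):
    """Stationary sine-Gordon breather with breathing frequency 0 < omega < 1."""
    eps = math.sqrt(1 - omega**2)
    return 4 * math.atan(eps * math.cos(omega * t) / (omega * math.cosh(eps * x)))


# Height at the centre over one breathing period, T = 2*pi/omega.
period = 2 * math.pi / 0.6
for step in range(5):
    t = step * period / 4
    print(f"t = {t:5.2f}: phi(0, t) = {sg_breather(0.0, t):+.3f}")
```

The height at the centre swings from its maximum through zero to an equally deep trough and back, while the cosh term keeps the whole thing localised around x = 0.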

The Peregrine soliton is a particular instance of a breather soliton. Breathers have also been found in an exotic state of matter called a Bose-Einstein condensate (which physicists are studying with the expectation that it will inspire technologies of the future), in plasmas in outer space, in the operational parameters of short-pulse lasers, and in fibre optics. Some researchers also think entities analogous to breather solitons could help proteins inside the cells in our bodies transport energy.

If you're interested in jumping down this rabbit hole, you could also look up the Akhmediev and the Kuznetsov-Ma breathers.

At first blush, solitons seem like monastic wanderers of a world otherwise replete with waves travelling as if loath to be separated from one another. Recall that one wave in 1834 gliding ever so placidly for over half a league, followed by a curious man on a horse galloping along the canal's bank. But for this venerable image, solitons are the children of a world far too sophisticated to admit waves crashing into each other with little more consequence than an enlivening spray of water and the formidable mathematics they demand to be understood.